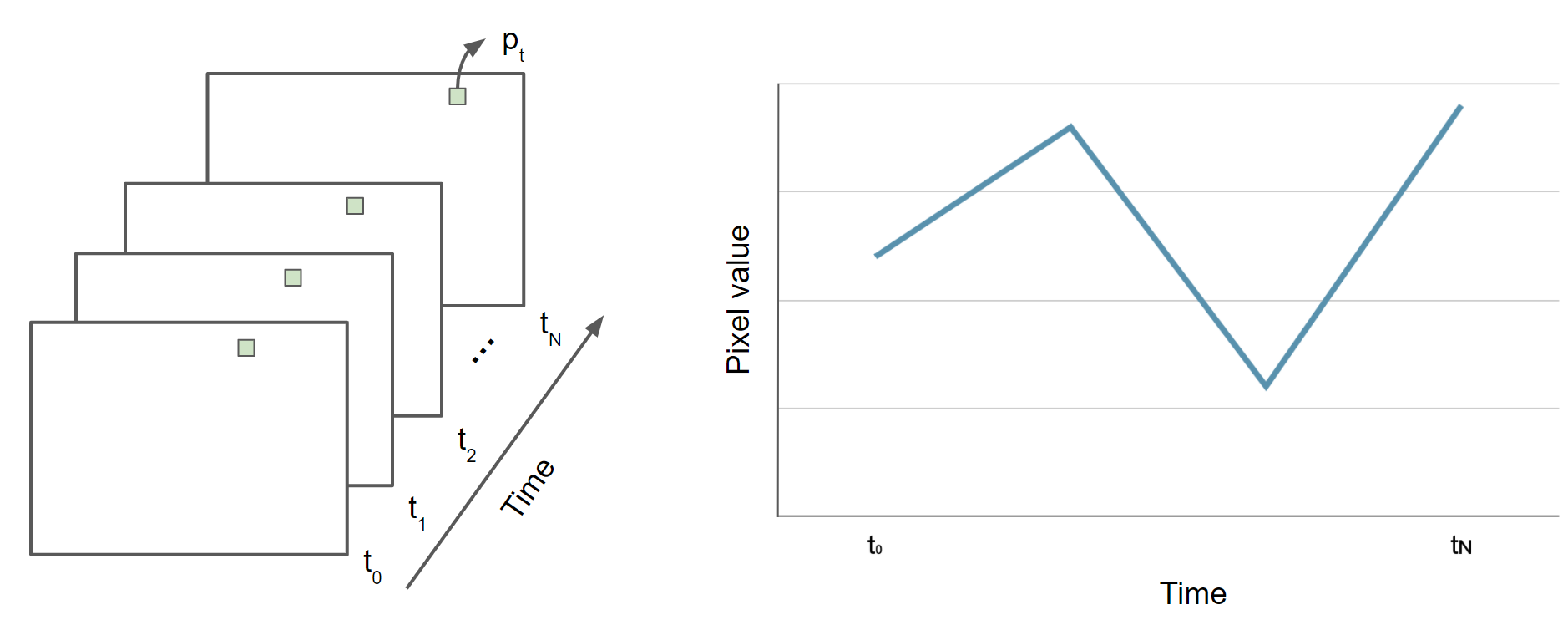

Image Series

One of the paradigm-changing features of Earth Engine is the ability to access decades of imagery without the previous limitation of needing to download all the data to a local disk for processing. Because remote-sensing data files can be enormous, this used to limit many projects to viewing two or three images from different periods. With Earth Engine, users can access tens or hundreds of thousands of images to understand the status of places across decades.

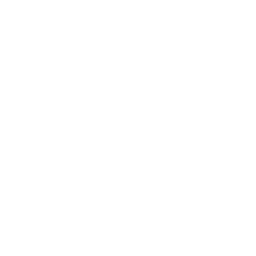

Filter, Map, Reduce

Overview

The purpose of this chapter is to teach you important programming concepts as they are applied in Earth Engine. We first use a real-world, non-programming example to show how the order and type of the filter, map, and reduce operations can matter. We then demonstrate these concepts with an ImageCollection, a key data type that distinguishes Earth Engine from desktop image-processing implementations.

Learning Outcomes

- Visualizing the concepts of filtering, mapping, and reducing with a hypothetical, non-programming example.

- Gaining context and experience with filtering an ImageCollection.

- Learning how to efficiently map a user-written function over the images of a filtered ImageCollection.

- Learning how to summarize a set of assembled values using Earth Engine reducers.

Assumes you know how to:

- Import images and image collections, filter, and visualize (Part F1).

- Perform basic image analysis: select bands, compute indices, create masks (Part F2).

Introduction

Prior chapters focused on exploring individual images—for example, viewing the characteristics of single satellite images by displaying different combinations of bands (Chap. F1.1), viewing single images from different datasets (Chap. F1.2, Chap. F1.3), and exploring image processing principles (Parts F2, F3) as they are implemented for cloud-based remote sensing in Earth Engine. Each image encountered in those chapters was pulled from a larger assemblage of images taken from the same sensor. The chapters used a few ways to narrow down the number of images in order to view just one for inspection (Part F1) or manipulation (Part F2, Part F3).

In this chapter and most of the chapters that follow, we will move from the domain of single images to the more complex and distinctive world of working with image collections, one of the fundamental data types within Earth Engine. The ability to conceptualize and manipulate entire image collections distinguishes Earth Engine and gives it considerable power for interpreting change and stability across space and time.

When looking for change or seeking to understand differences in an area through time, we often proceed through three ordered stages:

- Filter: selecting subsets of images based on criteria of interest.

- Map: manipulating each image in a set in some way to suit our goals.

- Reduce: estimating characteristics of the time series.

For users of other programming languages—R, MATLAB, C, Karel, and many others—this approach might seem awkward at first. We explain it below with a non-programming example: going to the store to buy milk.

Suppose you need to go shopping for milk, and you have two criteria for determining where you will buy your milk: location and price. The store needs to be close to your home, and as a first step in deciding whether to buy milk today, you want to identify the lowest price among those stores. You don’t know the cost of milk at any store ahead of time, so you need to efficiently contact each one and determine the minimum price to know whether it fits in your budget. If we were discussing this with a friend, we might say, “I need to find out how much milk costs at all the stores around here.” To solve that problem in a programming language, these words imply precise operations on sets of information. We can write the following “pseudocode,” which uses words that indicate logical thinking but that cannot be pasted directly into a program:

AllStoresOnEarth.filterNearbyStores.filterStoresWithMilk.getMilkPricesFromEachStore.determineTheMinimumValue

Imagine doing these actions not on a computer but in a more old-fashioned way: calling on the telephone for milk prices, writing the milk prices on paper, and inspecting the list to find the lowest value. In this approach, we begin with AllStoresOnEarth, since there is at least some possibility that we could decide to visit any store on Earth, a set that could include millions of stores, with prices for millions or billions of items. A wise first action would be to limit ourselves to nearby stores. Asking to filterNearbyStores would reduce the number of potential stores to hundreds, depending on how far we are willing to travel for milk. Then, working with that smaller set, we further filterStoresWithMilk, limiting ourselves to stores that sell our target item. At that point in the filtering, imagine that just 10 possibilities remain. Then, by telephone, we getMilkPricesFromEachStore, making a short paper list of prices. We then scan the list to determineTheMinimumValue to decide which store to visit.

In that example, each operation plays a different role in the workflow. The AllStoresOnEarth set, any member of which might sell inexpensive milk, is an enormous collection. The filtering actions filterNearbyStores and filterStoresWithMilk are operations that can happen on any set of stores. These actions take a set of stores, do some operation to limit that set, and return that smaller set of stores as an answer. The action to getMilkPricesFromEachStore takes a simple idea (calling a store for a milk price) and “maps” it over a given set of stores. Finally, with the list of nearby milk prices assembled, the action to determineTheMinimumValue, a general idea that could be applied to any list of numbers, identifies the cheapest one.
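The same sequence can be sketched in plain JavaScript with the built-in array methods filter, map, and reduce. This is not Earth Engine code. The store records, distances, and prices are made-up values, and getMilkPrice is a hypothetical stand-in for the phone call.

```javascript
// Hypothetical store records; in the analogy, this array is AllStoresOnEarth.
const allStoresOnEarth = [
  { name: "Corner Market", km: 0.8, sellsMilk: true, milkPrice: 3.49 },
  { name: "Hardware Depot", km: 1.2, sellsMilk: false, milkPrice: null },
  { name: "Fresh Foods", km: 2.5, sellsMilk: true, milkPrice: 2.99 },
  { name: "Distant Grocer", km: 9000, sellsMilk: true, milkPrice: 1.99 },
];

const maxDistanceKm = 5; // how far we are willing to travel

// Stand-in for "calling the store": takes one store, returns one price.
const getMilkPrice = (store) => store.milkPrice;

const cheapestNearbyPrice = allStoresOnEarth
  .filter((store) => store.km <= maxDistanceKm) // filterNearbyStores
  .filter((store) => store.sellsMilk)           // filterStoresWithMilk
  .map(getMilkPrice)                            // getMilkPricesFromEachStore
  .reduce((a, b) => Math.min(a, b), Infinity);  // determineTheMinimumValue

console.log(cheapestNearbyPrice); // 2.99
```

Each filter takes an array and returns a smaller array. The map step turns one store into one price, and reduce collapses the resulting list into a single number. Seeding reduce with Infinity keeps it from throwing an error if no store survives the filters.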

The list of steps above might seem almost too obvious, but the choice and order of operations can have a big impact on the feasibility of the problem. Imagine if we had decided to do the same operations in a slightly different order:

AllStoresOnEarth.filterStoresWithMilk.getMilkPricesFromEachStore.filterNearbyStores.determineTheMinimumValue

In this approach, we first identify all the stores on Earth that have milk, then contact them one by one to get their current milk price. If the contact is done by phone, this could be a painfully slow process involving millions of phone calls. It would take considerable “processing” time to make each call, and careful work to record each price onto a giant list. Processing the operations in this order would mean that only after contacting every milk seller on Earth could we identify the stores on our list that are too far away to visit, and then scan the prices of the remaining nearby stores to find the cheapest one. This should ultimately give the same answer as the more efficient first example, but only after requiring so much effort that we might want to give up.
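The plain-JavaScript sketch below makes the difference in effort concrete. It counts how many "phone calls" (invocations of the mapped function) each ordering makes. The 100,000 synthetic stores come from a deterministic rule chosen only for illustration: one store in 1,000 is nearby, and every other store sells milk.

```javascript
// Synthetic stores: every 1,000th store is nearby; even-numbered stores sell milk.
const stores = Array.from({ length: 100000 }, (_, i) => ({
  nearby: i % 1000 === 0,
  sellsMilk: i % 2 === 0,
  milkPrice: 2 + (i % 7) * 0.25,
}));

let calls = 0;
// Each invocation stands for one phone call. It returns the location flag along
// with the price so that a later filter can still tell nearby stores apart.
const callStore = (store) => {
  calls += 1;
  return { nearby: store.nearby, price: store.milkPrice };
};

function efficientOrder() {
  calls = 0;
  const min = stores
    .filter((s) => s.nearby)
    .filter((s) => s.sellsMilk)
    .map(callStore)
    .reduce((m, r) => Math.min(m, r.price), Infinity);
  return { min, calls };
}

function inefficientOrder() {
  calls = 0;
  const min = stores
    .filter((s) => s.sellsMilk)
    .map(callStore)           // every milk seller "on Earth" gets a call
    .filter((r) => r.nearby)  // location has to travel with the price
    .reduce((m, r) => Math.min(m, r.price), Infinity);
  return { min, calls };
}

const goodOrder = efficientOrder();
const badOrder = inefficientOrder();
console.log(goodOrder, badOrder); // { min: 2, calls: 100 } { min: 2, calls: 50000 }
```

Both orders find the same minimum. The second order, however, makes 500 times as many calls, and callStore has to return each store's location along with its price so that the nearby filter can still run afterward.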

In addition to the list being orders of magnitude larger, there are also possible slow points in the process. Could you make a million phone calls yourself? Maybe, but it might be pretty appealing to hire, say, 1000 people to help. While being able to make a large number of calls in parallel would speed up the calling stage, it’s important to note that you would need to wait for all 1000 callers to return their sublists of prices. Why wait? Nearby stores could be on any caller’s sublist, so any caller might be the one to find the lowest nearby price. The identification of the lowest nearby price would need to wait for the slowest caller, even if it turned out that all of that last caller’s prices came from stores on the other side of the world.

This counterexample would also have other complications—such as the need to track store locations on the list of milk prices—that could present serious problems if you did those operations in that unwise order. For now, the point is to filter, then map, then reduce. Below, we’ll apply these concepts to image collections.

Filtering Image Collections in Earth Engine

The first part of the filter, map, reduce paradigm is “filtering” to get a smaller ImageCollection from a larger one. As in the milk example, filters take a large set of items, limit it by some criterion, and return a smaller set for consideration. Here, filters take an ImageCollection, limit it by some criterion of date, location, or image characteristics, and return a smaller ImageCollection (Fig. F4.0.1).

As described first in Chap. F1.2, the Earth Engine API provides a set of filters for the ImageCollection type. The filters can limit an ImageCollection based on spatial, temporal, or attribute characteristics. Filters were used in Parts F1, F2, and F3 without much context or explanation, to isolate an image from an ImageCollection for inspection or manipulation. The information below should give perspective on that work while introducing some new tools for filtering image collections.

Below are three examples of limiting a Landsat 5 ImageCollection by characteristics and assessing the size of the resulting set.

FilterDate

This takes an ImageCollection as input and returns an ImageCollection whose members satisfy the specified date criteria. We’ll adapt the earlier filtering logic seen in Chap. F1.2:

var imgCol = ee.ImageCollection('LANDSAT/LT05/C02/T1_L2');

// How many Tier 1 Landsat 5 images have ever been collected?
print("All images ever: ", imgCol.size()); // A very large number

// How many images were collected in the 2000s?
var startDate = '2000-01-01';
var endDate = '2010-01-01';

var imgColfilteredByDate = imgCol.filterDate(startDate, endDate);
print("All images 2000-2010: ", imgColfilteredByDate.size());
// A smaller (but still large) number

After running the code, you should get a very large number for the full set of images. You also will likely get a very large number for the subset of images over the decade-scale interval.
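Conceptually, filterDate keeps only the images whose acquisition time falls inside the requested window. In Earth Engine, the start date is inclusive and the end date is exclusive, so '2010-01-01' serves as the end date for images from 2000 through 2009. The sketch below imitates that behavior in plain JavaScript. It runs on a hypothetical array of image metadata records, and the IDs and times are invented for illustration.

```javascript
// Hypothetical image metadata: an ID and an acquisition time in milliseconds.
const images = [
  { id: "img_a", time: Date.parse("1999-12-31T10:00:00Z") },
  { id: "img_b", time: Date.parse("2000-01-01T00:00:00Z") },
  { id: "img_c", time: Date.parse("2005-06-15T10:00:00Z") },
  { id: "img_d", time: Date.parse("2010-01-01T00:00:00Z") },
];

// Keep records with start <= time < end (start inclusive, end exclusive).
function filterDate(records, start, end) {
  const t0 = Date.parse(start); // date-only ISO strings parse as UTC midnight
  const t1 = Date.parse(end);
  return records.filter((r) => r.time >= t0 && r.time < t1);
}

const inDecade = filterDate(images, "2000-01-01", "2010-01-01");
console.log(inDecade.map((r) => r.id)); // [ 'img_b', 'img_c' ]
```

An image acquired exactly at midnight on the end date (img_d) is excluded, which is why consecutive windows such as 2000-2010 and 2010-2020 do not double-count images.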

FilterBounds

It may be that, as in the milk example, only images near a place of interest are useful to you. As first presented in Part F1, filterBounds takes an ImageCollection as input and returns an ImageCollection whose image footprints intersect a specified location. If we take the ImageCollection that was filtered by date and then filter it by bounds, we will have filtered the collection to those images near a specified point within the specified date interval. With the code below, we’ll count the number of images from the 2000s in the Shanghai vicinity, first visited in Chap. F1.1:

var ShanghaiImage = ee.Image('LANDSAT/LT05/C02/T1_L2/LT05_118038_20000606');
Map.centerObject(ShanghaiImage, 9);

var imgColfilteredByDateHere = imgColfilteredByDate.filterBounds(
    Map.getCenter());
print("All images here, 2000-2010: ",
    imgColfilteredByDateHere.size()); // A smaller number

If you’d like, you could take a few minutes to explore the behavior of the script in different parts of the world. To do that, you would need to comment out the Map.centerObject command to keep the map from moving to that location each time you run the script.
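filterBounds keeps the images whose footprints intersect the given geometry. As a rough mental model only, the sketch below treats each footprint as an axis-aligned longitude/latitude rectangle and tests whether a point falls inside it. Real Landsat footprints are rotated polygons, and Earth Engine performs a true geometric intersection, so the rectangles and scene IDs here are simplifying, hypothetical assumptions.

```javascript
// Hypothetical footprints as [west, south, east, north] in degrees.
const footprints = [
  { id: "scene_1", bbox: [120.5, 30.5, 122.5, 32.5] }, // covers Shanghai
  { id: "scene_2", bbox: [118.0, 30.0, 120.0, 32.0] }, // lies to the west
  { id: "scene_3", bbox: [121.0, 31.0, 123.0, 33.0] }, // overlaps scene_1
];

// True when the point [lon, lat] lies inside (or on the edge of) the box.
function containsPoint([west, south, east, north], [lon, lat]) {
  return lon >= west && lon <= east && lat >= south && lat <= north;
}

const shanghai = [121.47, 31.23]; // approximate city-center coordinates
const here = footprints.filter((f) => containsPoint(f.bbox, shanghai));
console.log(here.map((f) => f.id)); // [ 'scene_1', 'scene_3' ]
```

A point in the overlap of two footprints matches both, so it is counted once for each image that covers it.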

Filter by Other Image Metadata

As first explained in Chap. F1.3, the date and location of an image are characteristics stored with each image. Another important factor in image processing is the cloud cover, an image-level value computed for each image in many collections, including the Landsat and Sentinel-2 collections. The overall cloudiness score might be stored under different metadata tag names in different data sets. For example, for Sentinel-2, this overall cloudiness score is stored in the CLOUDY_PIXEL_PERCENTAGE metadata field. For Landsat 5, the ImageCollection we are using in this example, the image-level cloudiness score is stored using the tag CLOUD_COVER. If you are unfamiliar with how to find this information, see Part F1, where these skills are first presented.

Here, we will access the ImageCollection that we just built using filterBounds and filterDate, and then further filter the images by the image-level cloud cover score, using the filterMetadata function.

Next, let’s remove any images with 50% or more cloudiness. As will be described in subsequent chapters working with per-pixel cloudiness information, you might want to retain those images in a real-life study, if you feel some values within cloudy images might be useful. For now, to illustrate the filtering concept, let’s keep only images whose image-level cloudiness values indicate that the cloud coverage is lower than 50%. Here, we will take the set already filtered by bounds and date, and further filter it using the cloud percentage into a new ImageCollection. Add this line to the script to filter by cloudiness and print the size to the Console.

var L5FilteredLowCloudImages = imgColfilteredByDateHere
    .filterMetadata('CLOUD_COVER', 'less_than', 50);

print("Less than 50% clouds in this area, 2000-2010",
    L5FilteredLowCloudImages.size()); // A smaller number

Filtering in an Efficient Order

As you saw earlier in the hypothetical milk example, we typically filter, then map, and then reduce, in that order. In the same way that we would not want to call every store on Earth, preferring instead to narrow down the list of potential stores first, we filter images first in our workflow in Earth Engine. In addition, you may have noticed that the ordering of the filters within the filtering stage also mattered in the milk example. This is also true in Earth Engine. For problems with a non-global spatial component in which filterBounds is to be used, it is most efficient to do that spatial filtering first.

In the code below, you will see that you can “chain” the filter commands, which are then executed from left to right. Below, we chain the filters in the same order as you specified above. Note that it gives an ImageCollection of the same size as when you applied the filters one at a time.

var chainedFilteredSet = imgCol.filterDate(startDate, endDate)
    .filterBounds(Map.getCenter())
    .filterMetadata('CLOUD_COVER', 'less_than', 50);
print('Chained: Less than 50% clouds in this area, 2000-2010',
    chainedFilteredSet.size());

In the code below, we chain the filters in a more efficient order, implementing filterBounds first. This, too, gives an ImageCollection of the same size as when you applied the filters in the less efficient order, whether the filters were chained or not.

var efficientFilteredSet = imgCol.filterBounds(Map.getCenter())
    .filterDate(startDate, endDate)
    .filterMetadata('CLOUD_COVER', 'less_than', 50);
print('Efficient filtering: Less than 50% clouds in this area, 2000-2010',
    efficientFilteredSet.size());

Each of the two chained sets of operations will give the same result as before for the number of images. While the second order is more efficient, both approaches are likely to return the answer to the Code Editor at roughly the same time for this very small example. The order of operations is most important in larger problems in which you might be challenged to manage memory carefully. As in the milk example in which you narrowed geographically first, it is good practice in Earth Engine to order the filters with the filterBounds first, followed by metadata filters in order of decreasing specificity.
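The sketch below chains three filters in two orders over invented records and counts how many predicate checks each order performs. It is written in plain JavaScript, and the field cloudCover plays the role of CLOUD_COVER. The sketch shows the general principle that putting the most selective filter first shrinks the work done by every later filter. It is not a model of how Earth Engine actually schedules filters on its servers.

```javascript
// Synthetic catalog: 20,000 image records spread over 20 "locations".
const catalog = Array.from({ length: 20000 }, (_, i) => ({
  location: i % 20,
  year: 1990 + (i % 25),
  cloudCover: (i * 37) % 100,
}));

// Wrap a predicate so that every evaluation is tallied.
let checks = 0;
const counted = (pred) => (img) => {
  checks += 1;
  return pred(img);
};

const byDate = counted((img) => img.year >= 2000 && img.year < 2010);
const byBounds = counted((img) => img.location === 7);
const byCloud = counted((img) => img.cloudCover < 50);

// Apply the filters left to right, like a chain of .filter calls.
function run(filters) {
  checks = 0;
  const result = filters.reduce((set, f) => set.filter(f), catalog);
  return { size: result.length, checks };
}

const dateFirst = run([byDate, byBounds, byCloud]);
const boundsFirst = run([byBounds, byDate, byCloud]);
console.log(dateFirst, boundsFirst);
// { size: 200, checks: 28400 } { size: 200, checks: 21400 }
```

Both orders return the same 200 records. The bounds-first order skips 7,000 predicate checks, because the date and cloud filters only ever see the 1,000 records at the chosen location.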

Code Checkpoint F40a. The book’s repository contains a script that shows what your code should look like at this point.

Now, with an efficiently filtered collection that satisfies our chosen criteria, we will next explore the second stage: executing a function for all of the images in the set.

Mapping over Image Collections in Earth Engine

In Chap. F3.1, we calculated the Enhanced Vegetation Index (EVI) in very small steps to illustrate band arithmetic on satellite images. In that chapter, code was called once, on a single image. What if we wanted to compute the EVI in the same way for every image of an entire ImageCollection? Here, we use the key tool for the second part of the workflow in Earth Engine, a .map command (Fig. F4.0.1). This is roughly analogous to the step of making phone calls in the milk example that began this chapter, in which you took a list of store names and transformed it through effort into a list of milk prices.

Before beginning to code the EVI functionality, it’s worth noting that the word “map” is encountered in multiple settings during cloud-based remote sensing, and it’s important to be able to distinguish the uses. A good way to think of it is that “map” can act as a verb or as a noun in Earth Engine. There are two uses of “map” as a noun. We might refer casually to “the map,” or more precisely to “the Map panel”; these terms refer to the place where the images are shown in the code interface. A second way “map” is used as a noun is to refer to an Earth Engine object, which has functions that can be called on it. Examples of this are the familiar Map.addLayer and Map.setCenter. Where that use of the word is intended, it will be shown in purple text and capitalized in the Code Editor. What we are discussing here is the use of .map as a verb, representing the idea of performing a set of actions repeatedly on a set. This is typically referred to as “mapping over the set.”

To map a given set of operations efficiently over an entire ImageCollection, the processing needs to be set up in a particular way. Users familiar with other programming languages might expect to see “loop” code to do this, but the processing is not done exactly that way in Earth Engine. Instead, we will create a function, and then map it over the ImageCollection. To begin, envision creating a function that takes exactly one parameter, an ee.Image. The function is then designed to perform a specified set of operations on the input ee.Image and then, importantly, returns an ee.Image as the last step of the function. When we map that function over an ImageCollection, as we’ll illustrate below, the effect is that we begin with an ImageCollection, do operations to each image, and receive a processed ImageCollection as the output.

What kinds of functions could we create? For example, you could imagine a function taking an image and returning an image whose pixels have the value 1 where the value of a given band was lower than a certain threshold, and 0 otherwise. The effect of mapping this function would be an entire ImageCollection of images with zeroes and ones representing the results of that test on each image. Or you could imagine a function computing a complex self-defined index and sending back an image of that index calculated in each pixel. Here, we’ll create a function to compute the EVI for any input Landsat 5 image and return the one-band image for which the index is computed for each pixel. Copy and paste the function definition below into the Code Editor, adding it to the end of the script from the previous section.

var makeLandsat5EVI = function(oneL5Image) {
    // Compute the EVI for any Landsat 5 image. Note that it is specific
    // to Landsat 5 images due to the band numbers. Don't run this exact
    // function for images from sensors other than Landsat 5.

    // Extract the bands and convert them to surface reflectance using the
    // Collection 2 Level-2 scale factor (0.0000275) and offset (-0.2).
    var nirScaled = oneL5Image.select('SR_B4').multiply(0.0000275).add(-0.2);
    var redScaled = oneL5Image.select('SR_B3').multiply(0.0000275).add(-0.2);
    var blueScaled = oneL5Image.select('SR_B1').multiply(0.0000275).add(-0.2);

    // Calculate the numerator; note that order goes from left to right.
    var numeratorEVI = (nirScaled.subtract(redScaled)).multiply(2.5);

    // Calculate the denominator.
    var denomClause1 = redScaled.multiply(6);
    var denomClause2 = blueScaled.multiply(7.5);
    var denominatorEVI = nirScaled.add(denomClause1).subtract(
        denomClause2).add(1);

    // Calculate EVI and name it.
    var landsat5EVI = numeratorEVI.divide(denominatorEVI).rename('EVI');
    return landsat5EVI;
};

It is worth emphasizing that, in general, band names are specific to each ImageCollection. As a result, if that function were run on an image without the band ‘SR_B4’, for example, the function call would fail. Here, we have emphasized in the function’s name that it is specifically for creating EVI for Landsat 5.
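The arithmetic inside makeLandsat5EVI can be checked by hand on a single pixel. The plain-JavaScript sketch below applies EVI = 2.5 * (NIR - Red) / (NIR + 6 * Red - 7.5 * Blue + 1) to one set of made-up digital numbers. It assumes the published Landsat Collection 2 Level-2 scaling for the SR_B bands (multiply by 0.0000275, then add -0.2). If your collection uses a different scale factor, change toReflectance accordingly.

```javascript
// Convert a Collection 2 Level-2 SR digital number to surface reflectance
// (scale factor 0.0000275, offset -0.2; assumed here for illustration).
const toReflectance = (dn) => dn * 0.0000275 - 0.2;

// EVI from reflectance values, mirroring the steps in makeLandsat5EVI.
function evi(nir, red, blue) {
  const numerator = 2.5 * (nir - red);
  const denominator = nir + 6 * red - 7.5 * blue + 1;
  return numerator / denominator;
}

// Hypothetical digital numbers for one vegetated pixel.
const nir = toReflectance(18000); // SR_B4
const red = toReflectance(8000);  // SR_B3
const blue = toReflectance(8500); // SR_B1

console.log(evi(nir, red, blue).toFixed(3)); // 0.592
```

Working through one pixel like this is a quick way to confirm that the band selection and scaling in the Earth Engine function produce plausible values.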

The function makeLandsat5EVI is built to receive a single image, select the proper bands for calculating EVI, make the calculation, and return a one-band image. If we had the name of each image in our ImageCollection, we could enter the names into the Code Editor, call the function once for each, assign the results to variables, and then combine them into an ImageCollection. This would be very tedious and highly prone to mistakes: names might get mistyped, an image might be missed, and so on. Instead, as mentioned above, we will use .map. With the code below, let’s execute the .map command, print information about the resulting ImageCollection, and display it.

var L5EVIimages = efficientFilteredSet.map(makeLandsat5EVI);

print('Verifying that the .map gives back the same number of images: ',

L5EVIimages.size());

print(L5EVIimages);

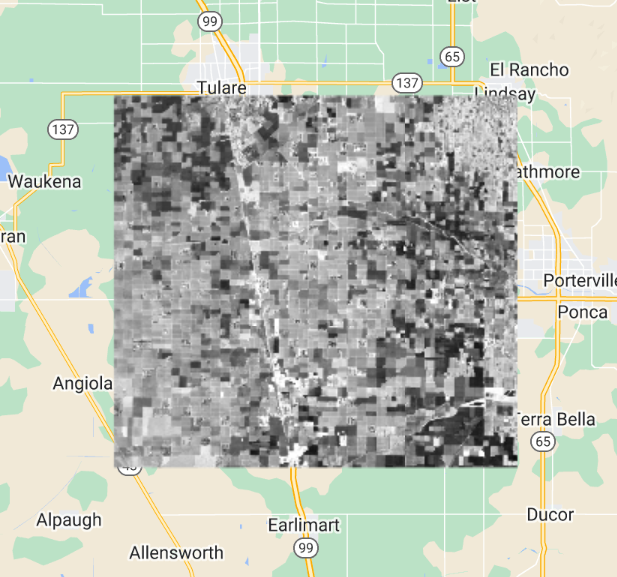

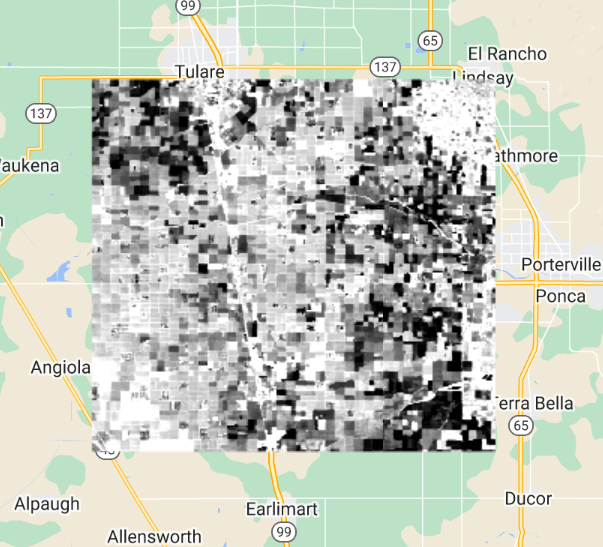

Map.addLayer(L5EVIimages, {}, 'L5EVIimages', 1, 1);After entering and executing this code, you will see a grayscale image. If you look closely at the edges of the image, you might spot other images drawn behind it in a way that looks somewhat like a stack of papers on a table. This is the drawing of the ImageCollection made from the makeLandsat5EVI function. You can select the Inspector panel and click on one of the grayscale pixels to view the values of the entire ImageCollection. After clicking on a pixel, look for the Series tag by opening and closing the list of items. When you open that tag, you will see a chart of the EVI values at that pixel, created by mapping the makeLandsat5EVI function over the filtered ImageCollection.
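In plain JavaScript, mapping a one-image function over a collection resembles Array.prototype.map. The toy model below is not Earth Engine code. Each "image" is an object holding a date and per-band arrays for a tiny two-pixel scene, and all reflectances are invented. Mapping an EVI function yields one output image per input image. Reading the same pixel from every output then gives the kind of time series that the Inspector's Series chart shows.

```javascript
// Toy "images": a date plus two-pixel arrays of reflectance per band.
const collection = [
  { date: "2001-05-01", nir: [0.30, 0.25], red: [0.05, 0.10], blue: [0.03, 0.06] },
  { date: "2001-07-20", nir: [0.35, 0.28], red: [0.04, 0.09], blue: [0.02, 0.05] },
  { date: "2001-10-08", nir: [0.22, 0.20], red: [0.07, 0.11], blue: [0.04, 0.07] },
];

// A function that takes exactly one image and returns exactly one image.
function makeEVI(img) {
  const evi = img.nir.map((n, p) => {
    const r = img.red[p];
    const b = img.blue[p];
    return (2.5 * (n - r)) / (n + 6 * r - 7.5 * b + 1);
  });
  return { date: img.date, evi };
}

const eviCollection = collection.map(makeEVI);

// Same number of images before and after mapping.
console.log(collection.length === eviCollection.length); // true

// Time series at pixel 0, like clicking one pixel with the Inspector.
const series = eviCollection.map((img) => [img.date, img.evi[0].toFixed(3)]);
console.log(series);
```

The mapped function never needs to know how many images the collection holds. It only has to accept one image and return one image, which is the contract that .map relies on.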

Code Checkpoint F40b. The book’s repository contains a script that shows what your code should look like at this point.

Reducing an Image Collection

The third part of the filter, map, reduce paradigm is “reducing” values in an ImageCollection to extract meaningful values (Fig. F4.0.1). In the milk example, we reduced a large list of milk prices to find the minimum value. The Earth Engine API provides a large set of reducers for reducing a set of values to a summary statistic.

Here, you can think of each location, after the calculation of EVI has been executed through the .map command, as having a list of EVI values at it. Each pixel contains a potentially very large set of EVI values; the stack might be 15 items high in one location and perhaps 200, 2000, or 200,000 items high in another location, especially if a looser set of filters had been used.

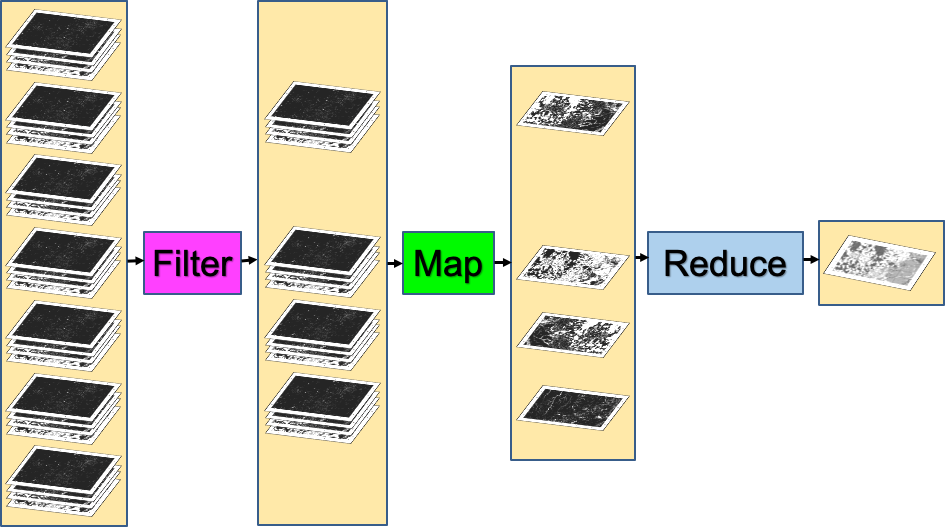

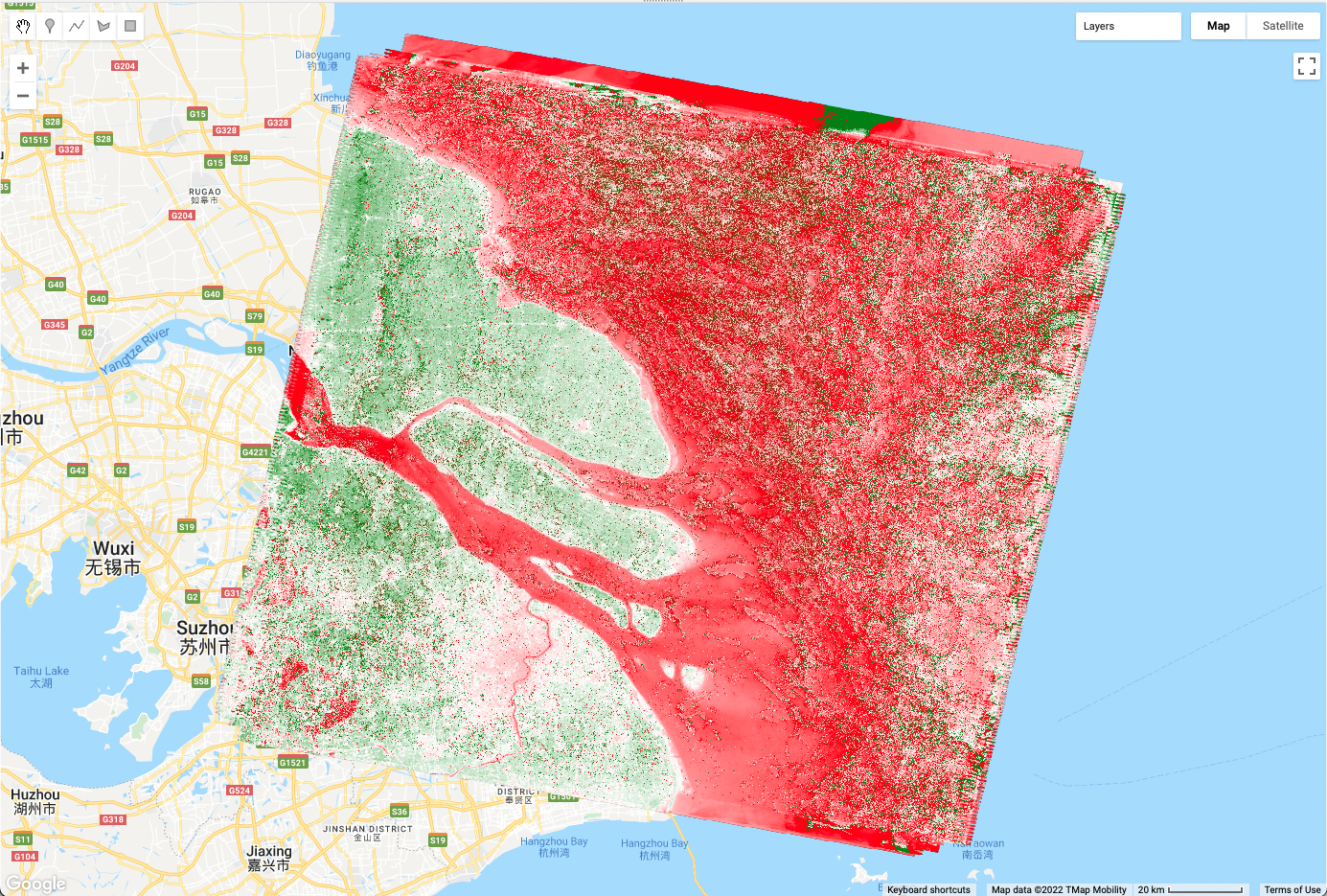

The code below computes the mean value, at every pixel, of the ImageCollection L5EVIimages created above. Add it at the bottom of your code.

var L5EVImean = L5EVIimages.reduce(ee.Reducer.mean());
print(L5EVImean);
Map.addLayer(L5EVImean, {
    min: -1,
    max: 2,
    palette: ['red', 'white', 'green']
}, 'Mean EVI');

Using the same principle, the code below computes and draws the median value of the ImageCollection in every pixel.

var L5EVImedian = L5EVIimages.reduce(ee.Reducer.median());
print(L5EVImedian);
Map.addLayer(L5EVImedian, {
    min: -1,
    max: 2,
    palette: ['red', 'white', 'green']
}, 'Median EVI');

There are many more reducers that work with an ImageCollection to produce a wide range of summary statistics. Reducers are not limited to returning only one item from the reduction. The minMax reducer, for example, returns a two-band image for each band it is given, one for the minimum and one for the maximum.

The reducers described here treat each pixel independently. In subsequent chapters in Part F4, you will see other kinds of reducers—for example, ones that summarize the characteristics in the neighborhood surrounding each pixel.
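A per-pixel reducer collapses the stack of values at each pixel into one number (or, for minMax, two). The sketch below imitates this on a toy stack of three single-band images, each a flat array of four pixel values. It is written in plain JavaScript, the numbers are invented, and reducePerPixel, mean, median, and minMax are hypothetical helpers rather than Earth Engine functions.

```javascript
// Three toy EVI images of four pixels each (one array per image).
const stack = [
  [0.2, 0.5, 0.1, 0.9],
  [0.4, 0.3, 0.1, 0.7],
  [0.6, 0.4, 0.4, 0.2],
];

// Apply `reducer` to the list of values found at each pixel position.
function reducePerPixel(images, reducer) {
  return images[0].map((_, p) => reducer(images.map((img) => img[p])));
}

const mean = (vals) => vals.reduce((a, b) => a + b, 0) / vals.length;

function median(vals) {
  const s = [...vals].sort((a, b) => a - b);
  const mid = Math.floor(s.length / 2);
  return s.length % 2 ? s[mid] : (s[mid - 1] + s[mid]) / 2;
}

// Two outputs per pixel, analogous to the min and max bands of minMax.
const minMax = (vals) => ({ min: Math.min(...vals), max: Math.max(...vals) });

console.log(reducePerPixel(stack, median)); // [ 0.4, 0.4, 0.1, 0.7 ]
console.log(reducePerPixel(stack, mean));
console.log(reducePerPixel(stack, minMax));
```

Each pixel's result depends only on the values stacked at that pixel. This is what distinguishes these reducers from the neighborhood reducers mentioned above.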

Code Checkpoint F40c. The book’s repository contains a script that shows what your code should look like at this point.

Conclusion

In this chapter, you learned about the paradigm of filter, map, reduce. You learned how to use these tools to sift through, operate on, and summarize a large set of images to suit your purposes. Using the Filter functionality, you learned how to take a large ImageCollection and filter away images that do not meet your criteria, retaining only those images that match a given set of characteristics. Using the Map functionality, you learned how to apply a function to each image in an ImageCollection, treating each image one at a time and executing a requested set of operations on each. Using the Reduce functionality, you learned how to summarize the elements of an ImageCollection, extracting summary values of interest. In the subsequent chapters of Part F4, you will encounter these concepts repeatedly, manipulating image collections according to your project needs using the building blocks seen here. By building on what you have done in this chapter, you will grow in your ability to do sophisticated projects in Earth Engine.

Exploring Image Collections

Overview

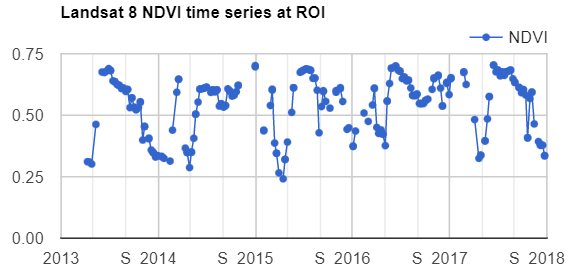

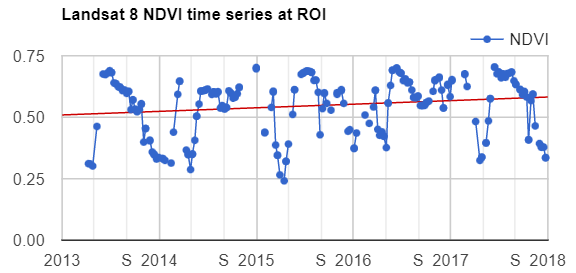

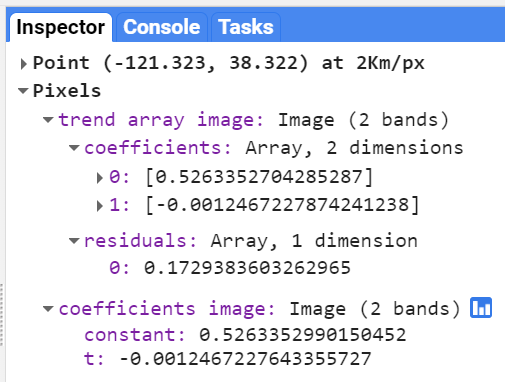

This chapter teaches how to explore image collections, including their spatiotemporal extent, resolution, and values stored in images and image properties. You will learn how to map and inspect image collections using maps, charts, and interactive tools, and how to compute different statistics of values stored in image collections using reducers.

Learning Outcomes

- Inspecting the spatiotemporal extent and resolution of image collections by mapping image geometry and plotting image time properties.

- Exploring properties of images stored in an ImageCollection by plotting charts and deriving statistics.

- Filtering image collections by using stored or computed image properties.

- Exploring the distribution of values stored in image pixels of an ImageCollection through percentile reducers.

Assumes you know how to:

- Import images and image collections, filter, and visualize (Part F1).

- Perform basic image analysis: select bands, compute indices, create masks (Part F2).

- Summarize an ImageCollection with reducers (Chap. F4.0).

In the previous chapter (Chap. F4.0), the filter, map, reduce paradigm was introduced. The main goal of this chapter is to demonstrate some of the ways that those concepts can be used within Earth Engine to better understand the variability of values stored in image collections. Sect. 1 demonstrates how time-dependent values stored in the images of an ImageCollection can be inspected using the Code Editor user interface after filtering them to a limited spatiotemporal range (i.e., geometry and time ranges). Sect. 2 shows how the extent of images, as well as basic statistics, such as the number of observations, can be visualized to better understand the spatiotemporal extent of image collections. Then, Sects. 3 and 4 demonstrate how simple reducers such as mean and median, and more advanced reducers such as percentiles, can be used to better understand how the values of a filtered ImageCollection are distributed.

Filtering and Inspecting an Image Collection

We will focus on the area in and surrounding Lisbon, Portugal. Below, we will define a point, lisbonPoint, located in the city; access the very large Landsat ImageCollection and limit it to the year 2020 and to the images that contain Lisbon; and select bands 6, 5, and 4 from each of the images in the resulting filtered ImageCollection.

// Define a region of interest as a point in Lisbon, Portugal.

var lisbonPoint = ee.Geometry.Point(-9.179473, 38.763948);

// Center the map at that point.

Map.centerObject(lisbonPoint, 16);

// filter the large ImageCollection to be just images from 2020

// around Lisbon. From each image, select true-color bands to draw

var filteredIC = ee.ImageCollection('LANDSAT/LC08/C02/T1_TOA')

.filterDate('2020-01-01', '2021-01-01')

.filterBounds(lisbonPoint)

.select(['B6', 'B5', 'B4']);

// Add the filtered ImageCollection so that we can inspect values

// via the Inspector tool

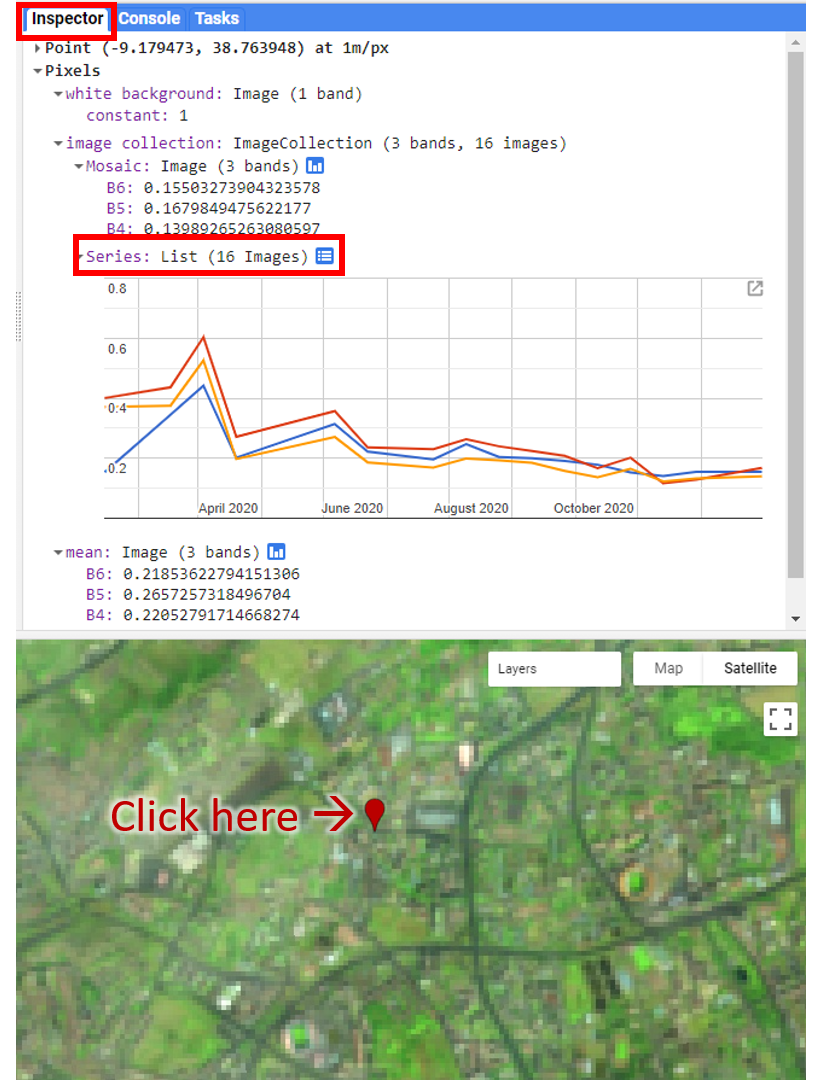

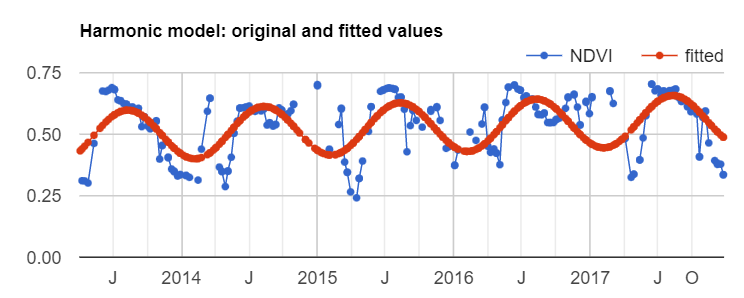

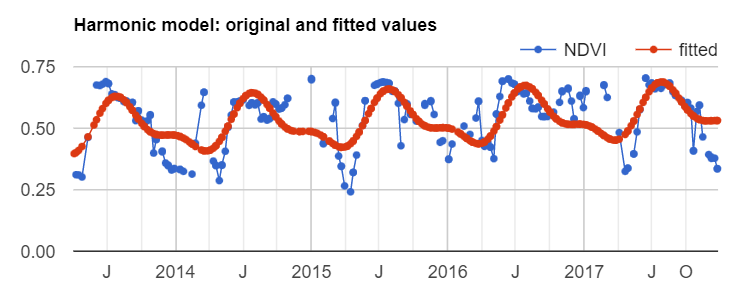

Map.addLayer(filteredIC, {}, 'TOA image collection');The three selected bands (which correspond to SWIR1, NIR, and Red) display a false-color image that accentuates differences between different land covers (e.g., concrete, vegetation) in Lisbon. With the Inspector tab highlighted (Fig. F4.1.1), clicking on a point will bring up the values of bands 6, 5, and 4 from each of the images. If you open the Series option, you’ll see the values through time. For the specified point and for all other points in Lisbon (since they are all enclosed in the same Landsat scene), there are 16 images gathered in 2020. By following one of the graphed lines (in blue, yellow, or red) with your finger, you should be able to count that many distinct values. Moving the mouse along the lines will show the specific values and the image dates.

We can also produce this kind of chart with code by using the ui.Chart.image.series function of the Earth Engine API. The following code snippet should result in the same chart that we observed in the Inspector tab, assuming the same pixel is clicked.

// Construct a chart using values queried from image collection.
var chart = ui.Chart.image.series({
    imageCollection: filteredIC,
    region: lisbonPoint,
    reducer: ee.Reducer.first(),
    scale: 10
});

// Show the chart in the Console.
print(chart);

Code Checkpoint F41a. The book’s repository contains a script that shows what your code should look like at this point.

How Many Images Are There, Everywhere on Earth?

Suppose we are interested in finding out how many valid observations we have at every map pixel on Earth for a given ImageCollection. This enormously computationally demanding task is surprisingly easy to do in Earth Engine. The API provides a set of reducer functions to summarize values to a single number in each pixel, as described in Chap. F4.0. We can apply one of these reducers, count, to a filtered ImageCollection with the code below. We’ll return to the same data set and filter for 2020, but without the geographic limitation. This will assemble images from all over the world, and then count the number of images in each pixel. The following code does that count and adds the resulting image to the map with a predefined red/yellow/green color palette stretched between values 0 and 50. Continue pasting the code below into the same script.

// compute and show the number of observations in an image collection

var count = ee.ImageCollection('LANDSAT/LC08/C02/T1_TOA')

.filterDate('2020-01-01', '2021-01-01')

.select(['B6'])

.count();

// add white background and switch to HYBRID basemap

Map.addLayer(ee.Image(1), {

palette: ['white']

}, 'white', true, 0.5);

Map.setOptions('HYBRID');

// show image count

Map.addLayer(count, {

min: 0,

max: 50,

palette: ['d7191c', 'fdae61', 'ffffbf', 'a6d96a', '1a9641']

}, 'landsat 8 image count (2020)');

// Center the map at that point.

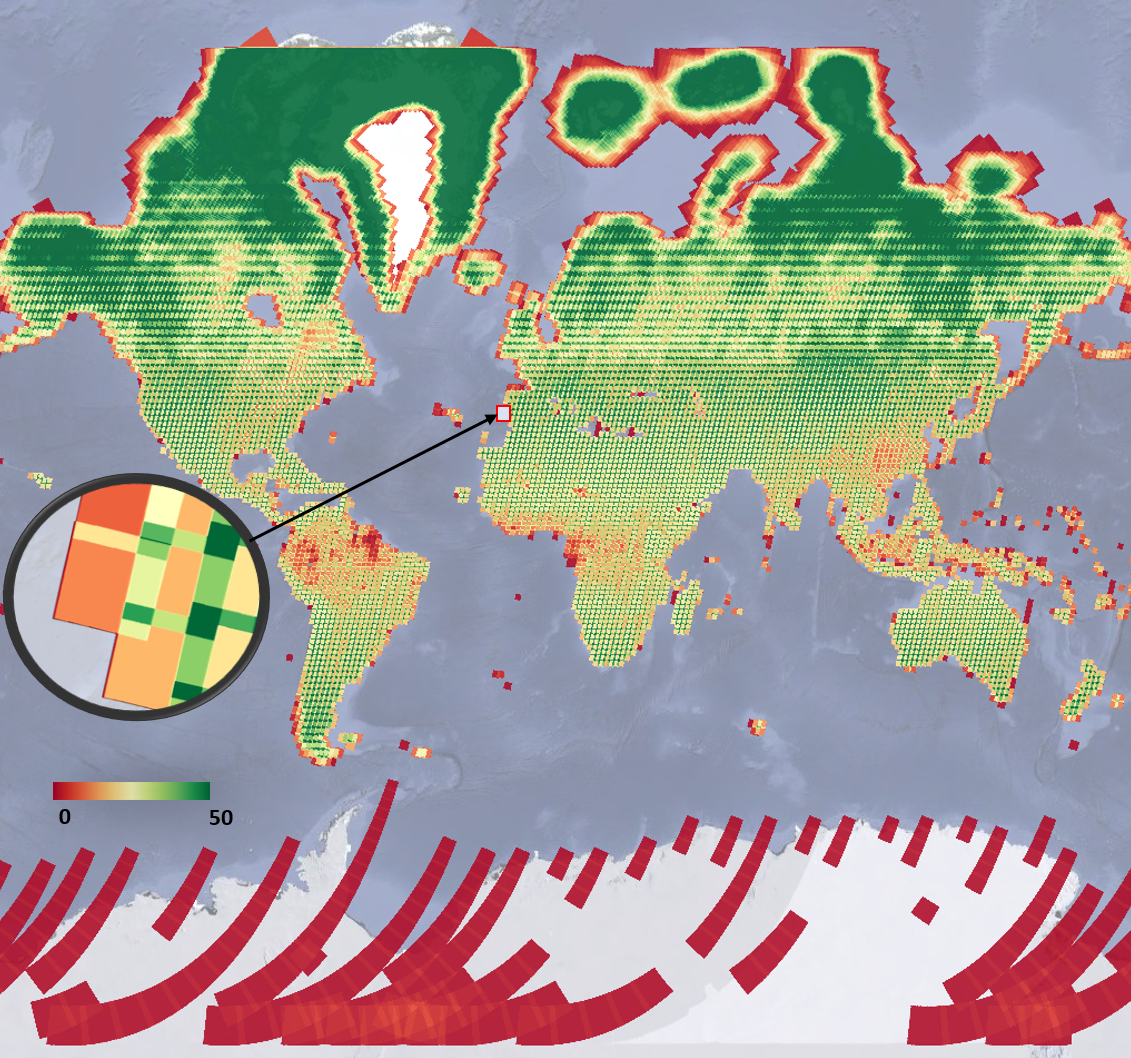

Map.centerObject(lisbonPoint, 5);Run the command and zoom out. If the count of images over the entire Earth is viewed, the resulting map should look like Fig. F4.1.2. The created map data may take a few minutes to fully load in.

Note the checkered pattern, somewhat reminiscent of a Mondrian painting. To understand why the image looks this way, it is useful to consider the overlapping image footprints. As Landsat passes over, each image is wide enough to produce substantial “sidelap” with the images from the adjacent paths, which are collected at different dates according to the satellite’s orbit schedule. In the north-south direction, there is also some overlap to ensure that there are no gaps in the data. Because these are served as distinct images and stored distinctly in Earth Engine, you will find that there can be two images from the same day with the same value for points in these overlap areas. Depending on the purposes of a study, you might find a way to ignore the duplicate pixel values during the analysis process.
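One way to discount same-day duplicates is to count distinct acquisition dates rather than images. The plain-JavaScript sketch below does this under the assumption that you already have the list of observation dates at one pixel. The dates are invented; in Earth Engine you would derive them from each image's system:time_start property.

```javascript
// Hypothetical acquisition dates of all images covering one pixel that lies
// in the north-south overlap of two scenes from the same path.
const observationDates = [
  "2020-01-07", "2020-01-07", // same overpass, two overlapping scenes
  "2020-01-23",
  "2020-02-08", "2020-02-08",
  "2020-02-24",
];

const imageCount = observationDates.length;            // what count reports
const distinctDays = new Set(observationDates).size;   // unique overpass dates

console.log(imageCount, distinctDays); // 6 4
```

Which of two same-day images to keep (for example, the one with lower cloud cover) is a study-specific choice.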

You might have noticed that we summarized a single band from the original ImageCollection so that the resulting image would give a single count in each pixel. The count reducer operates on every band passed to it. Because all bands of an image share the same footprint, passing all seven Landsat bands to the count reducer would have returned seven identical counts at each point (for example, seven values of 16 where 16 images were recorded). To avoid seeing the same number seven times, we selected one of the bands from each image in the collection. In your own work, you might want to use a different reducer, such as a median operation, that gives different, useful answers for each band. A few of these reducers are described below.
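As a plain-JavaScript illustration (not Earth Engine's implementation), the sketch below mimics what a per-band count does at one pixel: masked values, represented here as null, are skipped. Because every band of an image shares the same mask in this hypothetical example, each band ends up with the same count.

```javascript
// Each entry is one image's values at a single pixel; null marks a masked
// (invalid) value. All values are hypothetical.
const pixelStack = [
  { B4: 0.12, B5: 0.31, B6: 0.22 },
  { B4: null, B5: null, B6: null }, // this image is masked at the pixel
  { B4: 0.10, B5: 0.29, B6: 0.20 },
];

// Count the valid (non-null) values separately for each band.
function countPerBand(stack) {
  const counts = {};
  for (const image of stack) {
    for (const [band, value] of Object.entries(image)) {
      counts[band] = (counts[band] || 0) + (value === null ? 0 : 1);
    }
  }
  return counts;
}

console.log(countPerBand(pixelStack)); // { B4: 2, B5: 2, B6: 2 }
```

Since the counts repeat across bands, selecting a single band, as with select(['B6']), is enough to get the observation count.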

Code Checkpoint F41b. The book’s repository contains a script that shows what your code should look like at this point.

Reducing Image Collections to Understand Band Values

As we have seen, you could click at any point on Earth’s surface and see both the number of Landsat images recorded there in 2020 and the values of any image in any band through time. This is impressive and perhaps mind-bending, given the enormous amount of data in play. In this section and the next, we will explore two ways to summarize the numerical values of the bands—one straightforward way and one more complex but highly powerful way to see what information is contained in image collections.

First, we will make a new layer that represents the mean value of each band in every pixel across every image from 2020 in the filtered set, add this layer to the map, and explore it with the Inspector. In the previous section, we called the count reducer through a shorthand method, count(); we could similarly call mean() here. Instead, we will compute the mean with the more general reduce call, passing it ee.Reducer.mean(). Continue pasting the code below into the same script.

// Zoom to an informative scale for the code that follows.

Map.centerObject(lisbonPoint, 10);

// Add a mean composite image.

var meanFilteredIC = filteredIC.reduce(ee.Reducer.mean());

Map.addLayer(meanFilteredIC, {}, 'Mean values within image collection');

Now, let’s look at the median value for each band among all the values gathered in 2020. Using the code below, calculate the median and explore the image with the Inspector. Briefly compare this image with the mean image, both by eye and by clicking on a few pixels with the Inspector. The values should differ, but in most places the two images will look very similar.

// Add a median composite image.

var medianFilteredIC = filteredIC.reduce(ee.Reducer.median());

Map.addLayer(medianFilteredIC, {}, 'Median values within image collection');

There is a wide range of reducers available in Earth Engine. If you are curious about which reducers can be used to summarize band values across a collection of images, use the Docs tab in the Code Editor to list all reducers and look for those beginning with ee.Reducer.
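A small plain-JavaScript sketch shows why the mean and median layers differ at some pixels: a single bright outlier, such as a cloud that escaped screening, pulls the mean up but barely moves the median. The values are hypothetical; Earth Engine computes these statistics per band and per pixel across the collection.

```javascript
// Hypothetical red-band reflectance values for one pixel across a year of
// images; the single 0.45 value stands in for an undetected cloud.
const values = [0.08, 0.09, 0.10, 0.11, 0.45];

function mean(xs) {
  return xs.reduce((sum, x) => sum + x, 0) / xs.length;
}

// Median: middle value of the sorted list (average of the two middle
// values when the list length is even).
function median(xs) {
  const sorted = [...xs].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1
    ? sorted[mid]
    : (sorted[mid - 1] + sorted[mid]) / 2;
}

console.log(mean(values).toFixed(3)); // 0.166
console.log(median(values));          // 0.1
```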

Code Checkpoint F41c. The book’s repository contains a script that shows what your code should look like at this point.

Compute Multiple Percentile Images for an Image Collection

One particularly useful reducer that can help you better understand the variability of values in image collections is ee.Reducer.percentile. The nth percentile is the value below which n percent of the values in a set fall. In this context, you can imagine accessing all of the values for a given band in a given ImageCollection for a given pixel and sorting them. The 30th percentile, for example, is the value 30% of the way along the list from smallest to largest. This provides an easy way to explore the variability of the values in image collections, since a series of percentiles traces out each pixel’s cumulative distribution of values. The following code shows how we can calculate a single 30th percentile on a per-pixel and per-band basis for our Landsat 8 ImageCollection. Continue pasting the code below into the same script.

// compute a single 30% percentile

var p30 = filteredIC.reduce(ee.Reducer.percentile([30]));

Map.addLayer(p30, {

min: 0.05,

max: 0.35}, '30%');

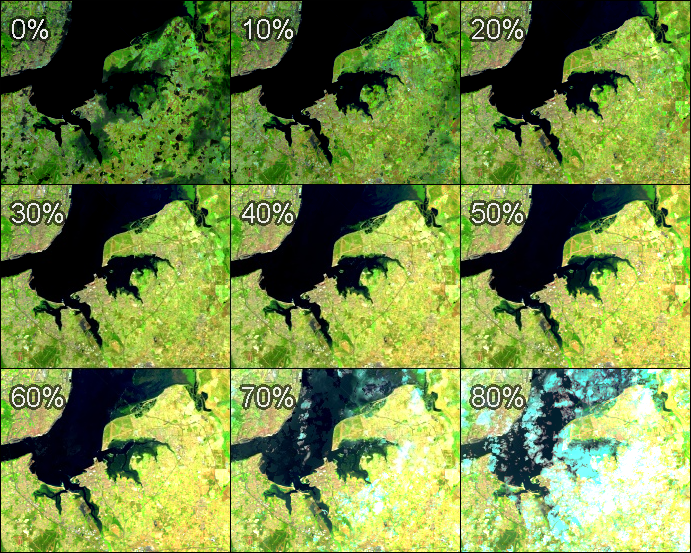

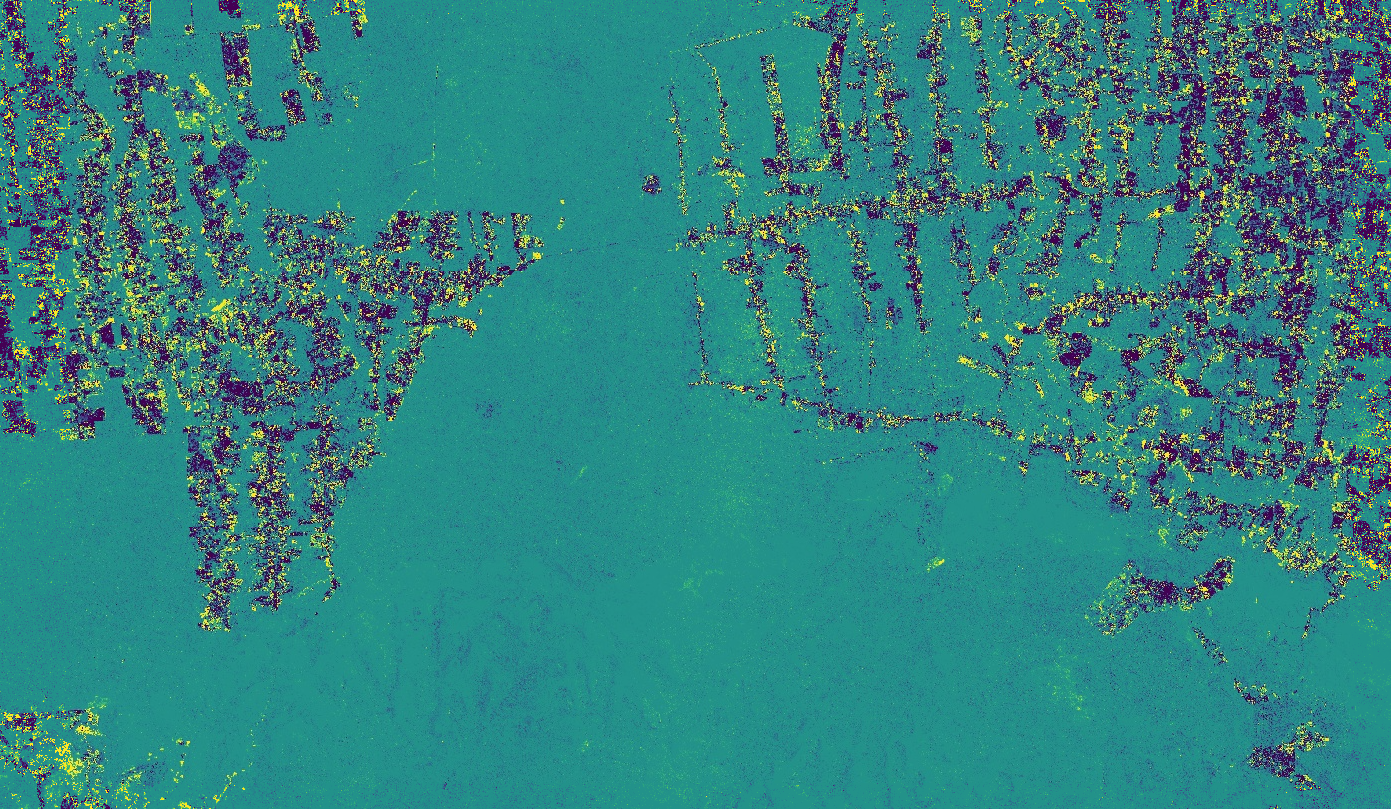

We can see that the resulting composite image (Fig. F4.1.3) has almost no cloudy pixels in this area. This happens because cloudy pixels usually have high reflectance values and fall in the upper part of each pixel’s sorted list, while other unwanted effects, such as cloud or hill shadows, typically have very low reflectance values and fall at the lower end. This is why the 30th percentile composite image looks so much cleaner than the mean composite image (meanFilteredIC) calculated earlier. Note that the reducers operate per pixel: adjacent pixels may be drawn from different images. This means that one pixel’s value could come from an image acquired on one date, and the adjacent pixel’s value from an entirely different period. Although, like the mean and median images, percentile images such as the one in Fig. F4.1.3 never existed on a single day, composite images allow us to view Earth’s surface without the noise that can make analysis difficult.
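To make the per-pixel logic concrete, here is a plain-JavaScript sketch of a nearest-rank percentile applied to one hypothetical pixel’s values. Nearest rank is one common definition; Earth Engine’s ee.Reducer.percentile may interpolate or bin values differently, so treat this as an illustration of the idea rather than of its exact output.

```javascript
// Nearest-rank percentile: sort the values and take the value at rank
// ceil(p * n / 100), with p = 0 mapped to the minimum (rank 1).
function percentile(xs, p) {
  const sorted = [...xs].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100));
  return sorted[rank - 1];
}

// Hypothetical reflectance values for one pixel across ten images:
// two shadowed (very dark), six clear, and two cloudy (very bright).
const pixelSeries = [0.02, 0.03, 0.09, 0.10, 0.10, 0.11, 0.11, 0.12, 0.40, 0.55];

console.log(percentile(pixelSeries, 0));   // 0.02 (shadow)
console.log(percentile(pixelSeries, 30));  // 0.09 (clear)
console.log(percentile(pixelSeries, 50));  // 0.1 (clear)
console.log(percentile(pixelSeries, 100)); // 0.55 (cloud)
```

As long as shadows and clouds each make up a minority of a pixel’s observations, a low-to-middle percentile lands on a clear value, which is the behavior visible in the 30th percentile composite.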

We can explore the range of values in an entire ImageCollection by viewing a series of increasingly bright percentile images, as shown in Fig. F4.1.4. Paste and run the following code.

var percentiles = [0, 10, 20, 30, 40, 50, 60, 70, 80];

// let's compute percentile images and add them as separate layers

percentiles.forEach(function(p) {

var image = filteredIC.reduce(ee.Reducer.percentile([p]));

Map.addLayer(image, {

min: 0.05,

max: 0.35

}, p + '%');

});

Note that the code adds every percentile image as a separate map layer, so you need to go to the Layers control and show or hide different layers to explore the differences. Here, we can see that low-percentile composite images depict darker, low-reflectance land features, such as water and cloud or hill shadows, while higher-percentile composite images (>70% in our example) depict clouds and any other atmospheric or land effects corresponding to bright reflectance values.

Earth Engine provides a very rich API, allowing users to explore image collections to better understand the extent and variability of data in space, time, and across bands, as well as tools to analyze values stored in image collections in a frequency domain. Exploring these values in different forms should be the first step of any study before developing data analysis algorithms.

Code Checkpoint F41d. The book’s repository contains a script that shows what your code should look like at this point.

Conclusion

In this chapter, you have learned different ways to explore image collections using Earth Engine, in addition to looking at individual images. You have learned that image collections in Earth Engine may contain images with global footprints as well as images with smaller, local footprints, and how to visualize the number of images in a given filtered ImageCollection. You have learned how to explore the temporal and spatial extent of images stored in image collections, how to quickly examine the variability of values in these image collections by computing simple statistics like the mean or median, and how to use a percentile reducer to better understand this variability.

References

Wilson AM, Jetz W (2016) Remotely sensed high-resolution global cloud dynamics for predicting ecosystem and biodiversity distributions. PLoS Biol 14:e1002415. https://doi.org/10.1371/journal.pbio.1002415

Aggregating Images for Time Series

Overview

Many remote sensing datasets consist of repeated observations over time. The interval between observations can vary widely. The Global Precipitation Measurement dataset, for example, produces observations of rain and snow worldwide every three hours. The Climate Hazards Group InfraRed Precipitation with Station (CHIRPS) project produces a gridded global dataset at the daily level and also for each five-day period. The Landsat 8 mission produces a new scene of each location on Earth every 16 days. With its constellation of two satellites, the Sentinel-2 mission images every location every five days.

Many applications, however, require computing aggregations of data at time intervals different from those at which the datasets were produced. For example, for determining rainfall anomalies, it is useful to compare monthly rainfall against a long-period monthly average.

While individual scenes are informative, many days are cloudy, and it is useful to build a robust cloud-free time series for many applications. Producing less cloudy or even cloud-free composites can be done by aggregating data to form monthly, seasonal, or yearly composites built from individual scenes. For example, if you are interested in detecting long-term changes in an urban landscape, creating yearly median composites can enable you to detect change patterns across long time intervals with less worry about day-to-day noise.

This chapter will cover the techniques for aggregating individual images from a time series at a chosen interval. We will take the CHIRPS time series of rainfall for one year and aggregate it to create a monthly rainfall time series.

Learning Outcomes

- Using the Earth Engine API to work with dates.

- Aggregating values from an ImageCollection to calculate monthly, seasonal, or yearly images.

- Plotting the aggregated time series at a given location.

Assumes you know how to:

- Import images and image collections, filter, and visualize (Part F1).

- Create a graph using ui.Chart (Chap. F1.3).

- Write a function and map it over an ImageCollection (Chap. F4.0).

- Summarize an ImageCollection with reducers (Chap. F4.0, Chap. F4.1).

- Inspect an Image and an ImageCollection, as well as their properties (Chap. F4.1).

Introduction

CHIRPS is a high-resolution global gridded rainfall dataset that combines satellite-measured precipitation with ground station data in a consistent, long time-series dataset (Funk et al. 2015). The data are provided by the University of California, Santa Barbara, and are available from 1981 to the present. This dataset is extremely useful in drought monitoring and in assessing global environmental change over land. The satellite data are calibrated with ground station observations to create the final product.

In this exercise, we will work with the CHIRPS dataset using the pentad. A pentad represents the grouping of five days. There are six pentads in a calendar month, with five pentads of exactly five days each and one pentad with the remaining three to six days of the month. Pentads reset at the beginning of each month, and the first day of every month is the start of a new pentad. Values at a given pixel in the CHIRPS dataset represent the total precipitation in millimeters over the pentad.
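The pentad rule can be sketched in plain JavaScript (this is not part of the Earth Engine script) as a function that lists each pentad’s first and last day for a given month. It also confirms the arithmetic behind 72 pentads per year: 12 months × 6 pentads.

```javascript
// Return the [firstDay, lastDay] ranges of the six pentads in a month:
// five 5-day pentads starting on days 1, 6, 11, 16, and 21, and a sixth
// pentad running from day 26 to the last day of the month.
function pentads(year, month) {
  // Day 0 of the following month is the last day of this month
  // (month is 1-based here; Date.UTC expects a 0-based month index).
  const lastDay = new Date(Date.UTC(year, month, 0)).getUTCDate();
  const ranges = [];
  for (let start = 1; start <= 21; start += 5) {
    ranges.push([start, start + 4]);
  }
  ranges.push([26, lastDay]);
  return ranges;
}

console.log(pentads(2019, 2)[5]); // [ 26, 28 ]  (3 days)
console.log(pentads(2019, 1)[5]); // [ 26, 31 ]  (6 days)
```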

Filtering an Image Collection

We will start by accessing the CHIRPS Pentad collection and filtering it to create a time series for a single year.

var chirps = ee.ImageCollection('UCSB-CHG/CHIRPS/PENTAD');

var startDate = '2019-01-01';

var endDate = '2020-01-01';

var yearFiltered = chirps.filter(ee.Filter.date(startDate, endDate));

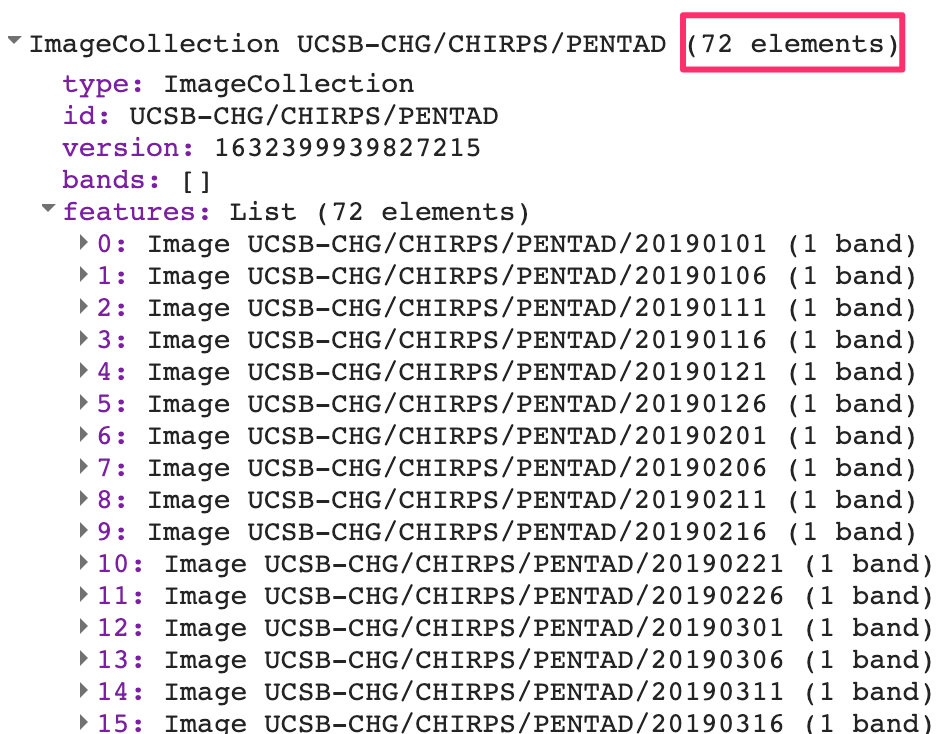

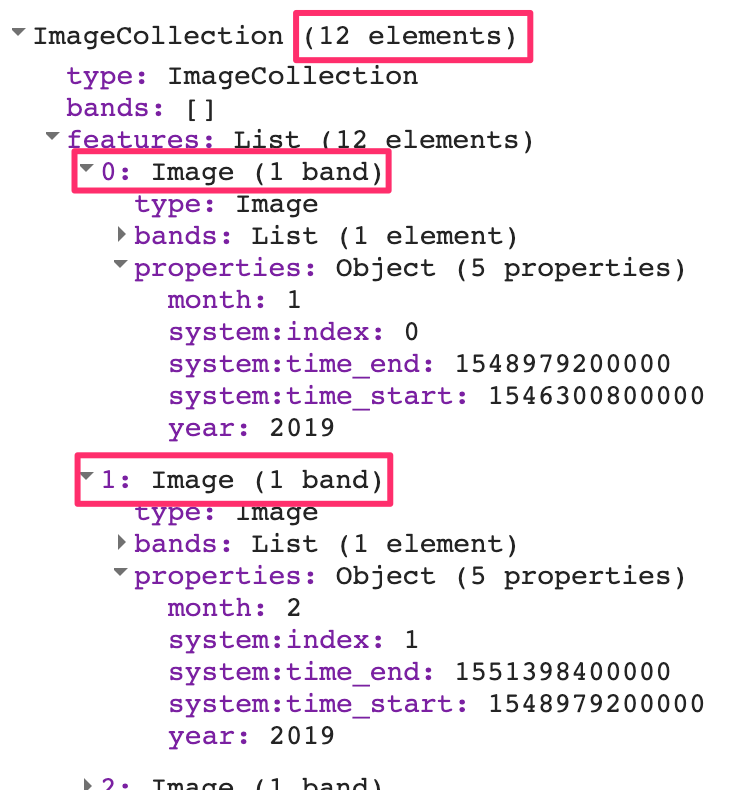

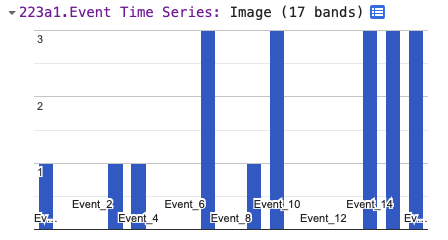

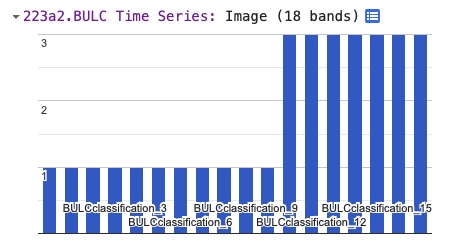

print(yearFiltered, 'Date-filtered CHIRPS images');

The CHIRPS collection contains one image for every pentad. The collection above is filtered to a single year, which contains 72 global images (12 months × 6 pentads). If you expand the printed collection in the Console, you will be able to see the metadata for individual images; note that their date stamps are spaced about five days apart, restarting on the first day of each month (Fig. F4.2.1).

Each image’s pixel values store the total precipitation during the pentad. Without aggregation to a period that matches other datasets, these layers are not very useful. For hydrological analysis, we typically need the total precipitation for each month or for a season. Let’s aggregate this collection so that we have 12 images—one image per month, with pixel values that represent the total precipitation for that month.

Code Checkpoint F42a. The book’s repository contains a script that shows what your code should look like at this point.

Working with Dates

To aggregate the time series, we need to learn how to create and manipulate dates programmatically. This section covers some functions from the ee.Date module that will be useful.

The Earth Engine API has a function called ee.Date.fromYMD that is designed to create a date object from year, month, and day values. The following code snippet shows how to define a variable containing the year value and create a date object from it. Paste the following code in a new script:

var chirps = ee.ImageCollection('UCSB-CHG/CHIRPS/PENTAD');

var year = 2019;

var startDate = ee.Date.fromYMD(year, 1, 1);

Now, let’s determine how to create an end date in order to specify a desired time interval. The preferred way to create a date relative to another date is to use the advance function. It takes two parameters, a delta value and a unit of time, and returns a new date. The code below shows how to create a date one year after a given date. Paste it into your script.

var endDate = startDate.advance(1, 'year');

Next, paste the code below to filter the CHIRPS data using these calculated dates. After running it, check that you set the dates correctly by looking at the dates of the images in the printed result.

var yearFiltered = chirps

.filter(ee.Filter.date(startDate, endDate));

print(yearFiltered, 'Date-filtered CHIRPS images');

Another date function that is very commonly used across Earth Engine is millis. This function takes a date object and returns the number of milliseconds elapsed since the reference date 1970-01-01T00:00:00Z (the start of 1970). This is known as a Unix timestamp (here expressed in milliseconds); it is a standard way to convert dates to numbers and allows easy, high-precision comparison between dates. Earth Engine stores the timestamps of images and features in special properties called system:time_start and system:time_end. Both properties must be given numbers rather than dates, and the millis function performs that conversion. You can print the result of calling this function and check for yourself.

print(startDate, 'Start date');

print(endDate, 'End date');

print('Start date as timestamp', startDate.millis());

print('End date as timestamp', endDate.millis());

We will use the millis function in the next section when we need to set the system:time_start and system:time_end properties of the aggregated images.
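Outside Earth Engine, the same millisecond timestamps can be reproduced with plain JavaScript, which is a handy way to sanity-check the values printed in the Console. The helper names fromYMD and advanceMonths below are hypothetical stand-ins for ee.Date.fromYMD and advance(n, 'month'); they are not Earth Engine functions.

```javascript
// Hypothetical analogue of ee.Date.fromYMD: month is 1-based here, so
// subtract 1 for JavaScript's 0-based Date.UTC. Returns UTC milliseconds
// since 1970-01-01T00:00:00Z.
function fromYMD(year, month, day) {
  return Date.UTC(year, month - 1, day);
}

// Hypothetical analogue of advance(n, 'month') on a UTC millisecond value.
// Caution: Date.UTC rolls overflowing days forward (Jan 31 + 1 month gives
// Mar 3); the chapter only advances from the 1st of a month, where this
// does not matter.
function advanceMonths(millis, months) {
  const d = new Date(millis);
  return Date.UTC(d.getUTCFullYear(), d.getUTCMonth() + months, d.getUTCDate());
}

const start = fromYMD(2019, 1, 1);
const end = advanceMonths(start, 12);

console.log(start);                       // 1546300800000
console.log(end);                         // 1577836800000
console.log(new Date(end).toISOString()); // 2020-01-01T00:00:00.000Z
```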

Code Checkpoint F42b. The book’s repository contains a script that shows what your code should look like at this point.

Aggregating Images

Now we can start aggregating the pentads into monthly sums. The process of aggregation has two fundamental steps. The first is to determine the beginning and ending dates of one time interval (in this case, one month), and the second is to sum up all of the values (in this case, the pentads) that fall within each interval. To begin, we can envision that the resulting series will contain 12 images. To prepare to create an image for each month, we create an ee.List of values from 1 to 12. We can use the ee.List.sequence function, as first presented in Chap. F1.0, to create the list of items of type ee.Number. Continuing with the script of the previous section, paste the following code:

// Aggregate this time series to compute monthly images.

// Create a list of months

var months = ee.List.sequence(1, 12);

Next, we write a function that takes a single month as the input and returns an aggregated image for that month. Given beginningMonth as an input parameter, we first create a start and end date for that month based on the year variable and beginningMonth. Then we filter the collection to find all images for that month. To create a monthly precipitation image, we apply ee.Reducer.sum to reduce the six pentad images for a month to a single image holding the summed value across the pentads. We also explicitly set the timestamp properties system:time_start and system:time_end of the resulting summed image. We also set year and month properties, which will help us filter the resulting collection later.

// Write a function that takes a month number

// and returns a monthly image.

var createMonthlyImage = function(beginningMonth) {

var startDate = ee.Date.fromYMD(year, beginningMonth, 1);

var endDate = startDate.advance(1, 'month');

var monthFiltered = yearFiltered

.filter(ee.Filter.date(startDate, endDate));

// Calculate total precipitation.

var total = monthFiltered.reduce(ee.Reducer.sum());

return total.set({

'system:time_start': startDate.millis(),

'system:time_end': endDate.millis(),

'year': year,

'month': beginningMonth

});

};

We now have an ee.List containing items of type ee.Number from 1 to 12, and a function that can compute a monthly aggregated image for each month number. All that is left to do is to map the function over the list. As described in Chaps. F4.0 and F4.1, the map function passes over each item in the list and runs createMonthlyImage on it. The function first receives the number “1” and executes, returning an image to Earth Engine. Then it runs on the number “2”, and so on for all 12 numbers. The result is a list containing one image for each month of the year.

// map() the function on the list of months

// This creates a list with images for each month in the list

var monthlyImages = months.map(createMonthlyImage);

We can create an ImageCollection from this ee.List of images using the ee.ImageCollection.fromImages function.

// Create an ee.ImageCollection.

var monthlyCollection = ee.ImageCollection.fromImages(monthlyImages);

print(monthlyCollection);

We have now successfully computed an aggregated collection from the source ImageCollection by filtering, mapping, and reducing, as described in Chaps. F4.0 and F4.1. Expand the printed collection in the Console to verify that the newly created ImageCollection contains 12 images (Fig. F4.2.2).
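The same filter, map, and reduce pattern can be traced with ordinary JavaScript arrays for a single pixel. In this sketch the rainfall values are invented, and createMonthlyTotal is a hypothetical analogue of createMonthlyImage that sums numbers instead of images.

```javascript
// Hypothetical pentad totals (mm) for one pixel in January and February 2019.
const pentadStartDays = [1, 6, 11, 16, 21, 26];
const rainfall = { 1: [2, 0, 5, 1, 0, 3], 2: [0, 4, 4, 0, 1, 1] };

// One record per pentad: its start time (UTC millis) and total rainfall.
const pentadRecords = [];
for (const [month, totals] of Object.entries(rainfall)) {
  pentadStartDays.forEach((day, i) => {
    pentadRecords.push({ time: Date.UTC(2019, Number(month) - 1, day), mm: totals[i] });
  });
}

// Filter the records to one month, then reduce them to a sum.
function createMonthlyTotal(year, month) {
  const start = Date.UTC(year, month - 1, 1);
  const end = Date.UTC(year, month, 1); // exclusive, like a date filter's end
  const inMonth = pentadRecords.filter((r) => r.time >= start && r.time < end);
  const total = inMonth.reduce((sum, r) => sum + r.mm, 0);
  return { month, total, time_start: start, time_end: end };
}

// Map over the list of months, as months.map(createMonthlyImage) does.
const monthly = [1, 2].map((m) => createMonthlyTotal(2019, m));
console.log(monthly.map((m) => m.total)); // [ 11, 10 ]
```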

Code Checkpoint F42c. The book’s repository contains a script that shows what your code should look like at this point.

Plotting Time Series

One useful application of gridded precipitation datasets is to analyze rainfall patterns. We can plot a time-series chart for a location using the newly computed time series. We can plot the pixel value at any given point or polygon. Here we create a point geometry for a given coordinate. Continuing with the script of the previous section, paste the following code:

// Create a point with coordinates for the city of Bengaluru, India.

var point = ee.Geometry.Point(77.5946, 12.9716);

Earth Engine comes with a built-in ui.Chart.image.series function that can plot time series. In addition to the imageCollection and region parameters, we need to supply a scale value. The CHIRPS data catalog page indicates that the resolution of the data is 5566 meters, so we can use that as the scale. The resulting chart is printed in the Console.
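The 5566 m figure is consistent with the 0.05° grid spacing of CHIRPS measured at the equator. The quick check below assumes a spherical Earth with the WGS84 equatorial radius; it is plain arithmetic, not an Earth Engine call.

```javascript
// Approximate ground size of one 0.05-degree cell at the equator, assuming
// a sphere with the WGS84 equatorial radius (6378137 m).
const EQUATORIAL_RADIUS_M = 6378137;
const metersPerDegree = (2 * Math.PI * EQUATORIAL_RADIUS_M) / 360;
const cellSizeM = 0.05 * metersPerDegree;

console.log(cellSizeM.toFixed(1)); // 5566.0
```

Away from the equator, the east-west width of a 0.05° cell shrinks roughly with the cosine of latitude, but for sampling a single point the nominal scale still selects the cell that contains the point.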

var chart = ui.Chart.image.series({

imageCollection: monthlyCollection,

region: point,

reducer: ee.Reducer.mean(),

scale: 5566,

});

print(chart);

We can make the chart more informative by adding axis labels and a title. The setOptions function allows us to customize the chart using parameters from Google Charts. To customize the chart, paste the code below at the bottom of your script. You will then see two charts in the Console: the original one and the customized one.

var chart = ui.Chart.image.series({

imageCollection: monthlyCollection,

region: point,

reducer: ee.Reducer.mean(),

scale: 5566

}).setOptions({

lineWidth: 1,

pointSize: 3,

title: 'Monthly Rainfall at Bengaluru',

vAxis: {

title: 'Rainfall (mm)' },

hAxis: {

title: 'Month',

gridlines: {

count: 12 }

}

});

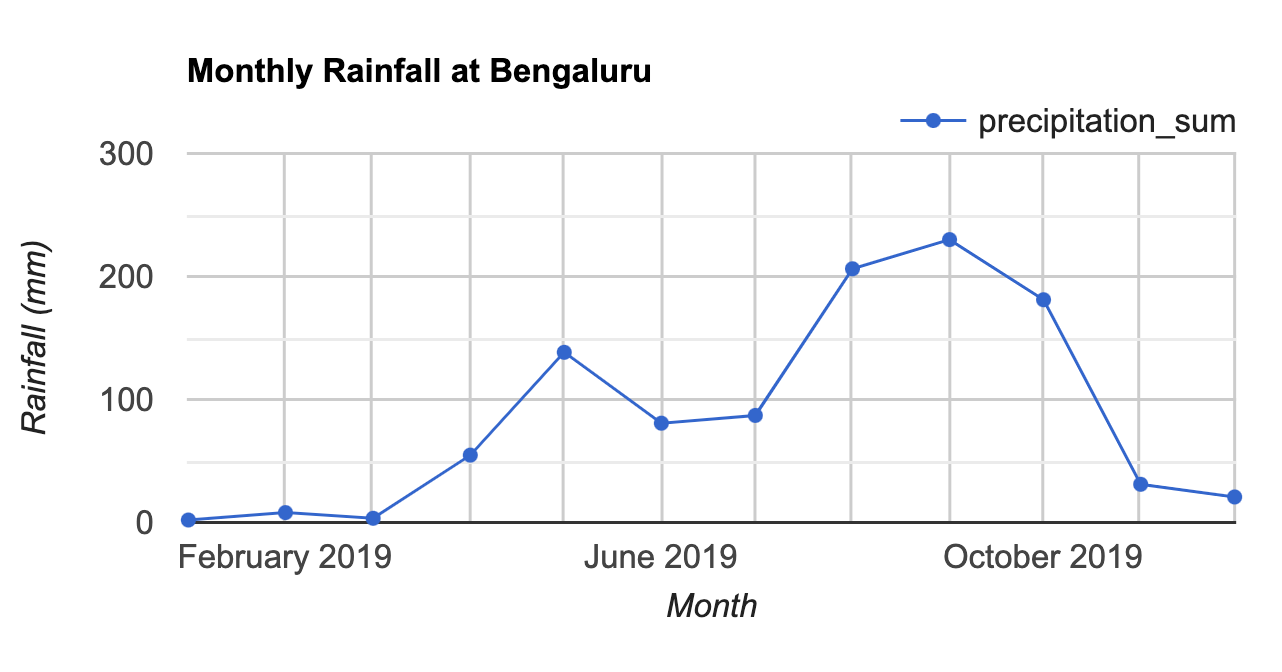

print(chart);

The customized chart (Fig. F4.2.3) shows the typical rainfall pattern in the city of Bengaluru, India. Bengaluru has a temperate climate, with pre-monsoon rains in April and May cooling down the city and a moderate monsoon season lasting from June to September.

Code Checkpoint F42d. The book’s repository contains a script that shows what your code should look like at this point.

Conclusion

In this chapter, you learned how to aggregate a collection to months and plot the resulting time series for a location. This chapter also introduced useful functions for working with the dates that will be used across many different applications. You also learned how to iterate over a list using the map function. The technique of mapping a function over a list or collection is essential for processing data. Mastering this technique will allow you to scale your analysis using the parallel computing capabilities of Earth Engine.

References

Banerjee A, Chen R, Meadows ME, et al (2020) An analysis of long-term rainfall trends and variability in the Uttarakhand Himalaya using Google Earth Engine. Remote Sens 12:709. https://doi.org/10.3390/rs12040709

Funk C, Peterson P, Landsfeld M, et al (2015) The climate hazards infrared precipitation with stations – a new environmental record for monitoring extremes. Sci Data 2:1–21. https://doi.org/10.1038/sdata.2015.66

Okamoto K, Ushio T, Iguchi T, et al (2005) The global satellite mapping of precipitation (GSMaP) project. In: International Geoscience and Remote Sensing Symposium (IGARSS). pp 3414–3416

Clouds and Image Compositing

Overview

The purpose of this chapter is to provide necessary context and demonstrate different approaches for image composite generation when using data quality flags, using an initial example of removing cloud cover. We will examine different filtering options, demonstrate an approach for cloud masking, and provide additional opportunities for image composite development. Pixel selection for composite development can exclude unwanted pixels—such as those impacted by cloud, shadow, and smoke or haze—and can also preferentially select pixels based upon proximity to a target date or a preferred sensor type.

Learning Outcomes

- Understanding and applying satellite-specific cloud mask functions.

- Incorporating images from different sensors.

- Using focal functions to fill in data gaps.

Assumes you know how to:

- Import images and image collections, filter, and visualize (Part F1).

- Perform basic image analysis: select bands, compute indices, create masks (Part F2).

- Use band scaling factors (Chap. F3.1).

- Perform pixel-based transformations (Chap. F3.1).

- Use neighborhood-based image transformations (Chap. F3.2).

- Write a function and map it over an ImageCollection (Chap. F4.0).

- Summarize an ImageCollection with reducers (Chap. F4.0, Chap. F4.1).

Introduction

In many respects, satellite remote sensing is an ideal source of data for monitoring large or remote regions. However, cloud cover is one of the most common limitations of optical sensors in providing continuous time series of data for surface mapping and monitoring. This is particularly relevant in tropical, polar, mountainous, and high-latitude areas, where clouds are often present. Many studies have addressed the extent to which cloudiness can restrict the monitoring of various regions (Zhu and Woodcock 2012, 2014; Eberhardt et al. 2016; Martins et al. 2018).

Clouds and cloud shadows reduce the view of optical sensors and completely block or obscure the spectral response from Earth’s surface (Cao et al. 2020). Working with pixels that are cloud-contaminated can significantly influence the accuracy and information content of products derived from a variety of remote sensing activities, including land cover classification, vegetation modeling, and especially change detection, where unscreened clouds might be mapped as false changes (Braaten et al. 2015, Zhu et al. 2015). Thus, the information provided by cloud detection algorithms is critical to exclude clouds and cloud shadows from subsequent processing steps.

Historically, cloud detection algorithms derived cloud information from a single-date image and the sun illumination geometry (Irish et al. 2006, Huang et al. 2010). In contrast, current, more accurate cloud detection algorithms are based on the analysis of Landsat time series (Zhu and Woodcock 2014, Zhu and Helmer 2018). Cloud detection algorithms provide information on the presence of clouds, cloud shadows, and other conditions (e.g., the presence of snow). For Landsat and Sentinel-2 imagery, the presence and extent of cloud contamination within a pixel is currently provided as ancillary data via pixel-level quality flags. Quality flags also describe other acquisition-related conditions, including radiometric saturation and terrain occlusion, which lets us assess whether each pixel is suitable for inclusion in subsequent analyses. The quality flags are ideally suited to reducing users’ manual supervision and maximizing automated processing.

Most automated algorithms (for classification or change detection, for example) work best on images that are free of clouds and cloud shadows and that cover the full area without spatial or spectral inconsistencies. Thus, the image representation over the study area should be seamless, containing as few data gaps as possible. Image compositing techniques are primarily used to reduce the impact of clouds and cloud shadows, as well as aerosol contamination, view angle effects, and data volumes (White et al. 2014). Compositing approaches typically rely on the outputs of cloud detection algorithms and quality flags to include or exclude pixels from the resulting composite products (Roy et al. 2010). Epochal image composites help overcome the limited availability of cloud-free imagery in some areas, and are constructed by considering the pixels from all images acquired in a given period (e.g., season, year).

The information provided by the cloud masks and pixel flags guides the establishment of rules to rank the quality of the pixels based on the presence of and distance to clouds, cloud shadows, or atmospheric haze (Griffiths et al. 2010). Higher scores are assigned to pixels with more desirable conditions, based on the presence of clouds and also other acquisition circumstances, such as acquisition date or sensor. Those pixels with the highest scores are included in the subsequent composite development. Image compositing approaches enable users to define the rules that are most appropriate for their particular information needs and study area to generate imagery covering large areas instead of being limited to the analysis of single scenes (Hermosilla et al. 2015, Loveland and Dwyer 2012). Moreover, generating image composites at regular intervals (e.g., annually) allows for the analysis of long temporal series over large areas, fulfilling a critical information need for monitoring programs.
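A toy version of this ranking can be written for a single pixel. The scoring function below is entirely hypothetical (its weights and thresholds are not those of any published method, including GEE-BAP); it only shows the mechanics of scoring every candidate observation and keeping the highest-scoring one.

```javascript
// Hypothetical candidate observations for one pixel: day of year, distance
// (in pixels) to the nearest cloud or shadow, and a cloud flag.
const candidates = [
  { doy: 200, distToCloud: 2, cloudy: false },
  { doy: 230, distToCloud: 40, cloudy: false },
  { doy: 215, distToCloud: 0, cloudy: true },
];

// Illustrative score: 0 for cloudy pixels; otherwise the average of a
// distance-to-cloud score (saturating at 50 px) and a target-date score
// that falls off linearly to 0 at 60 days from the target day of year.
function score(obs, targetDoy) {
  if (obs.cloudy) return 0;
  const distScore = Math.min(obs.distToCloud, 50) / 50;
  const dateScore = Math.max(0, 1 - Math.abs(obs.doy - targetDoy) / 60);
  return (distScore + dateScore) / 2;
}

// Keep the highest-scoring observation for the composite.
function bestAvailable(obsList, targetDoy) {
  return obsList.reduce((best, obs) =>
    score(obs, targetDoy) > score(best, targetDoy) ? obs : best);
}

console.log(bestAvailable(candidates, 213)); // { doy: 230, distToCloud: 40, cloudy: false }
```

Here the observation closest to the target date loses because it sits only two pixels from a cloud; changing the weights would change that trade-off, which is exactly the kind of rule compositing approaches let users define.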

The general workflow to generate a cloud-free composite involves:

- Defining your area of interest (AOI).

- Filtering (ee.Filter) the satellite ImageCollection to desired parameters.

- Applying a cloud mask.

- Reducing (ee.Reducer) the collection to generate a composite.

- Using the GEE-BAP application to generate annual best-available-pixel image composites by globally combining multiple Landsat sensors and images.

Additional steps may be necessary to improve the composite generated. These steps will be explained in the following sections.

Cloud Filter and Cloud Mask

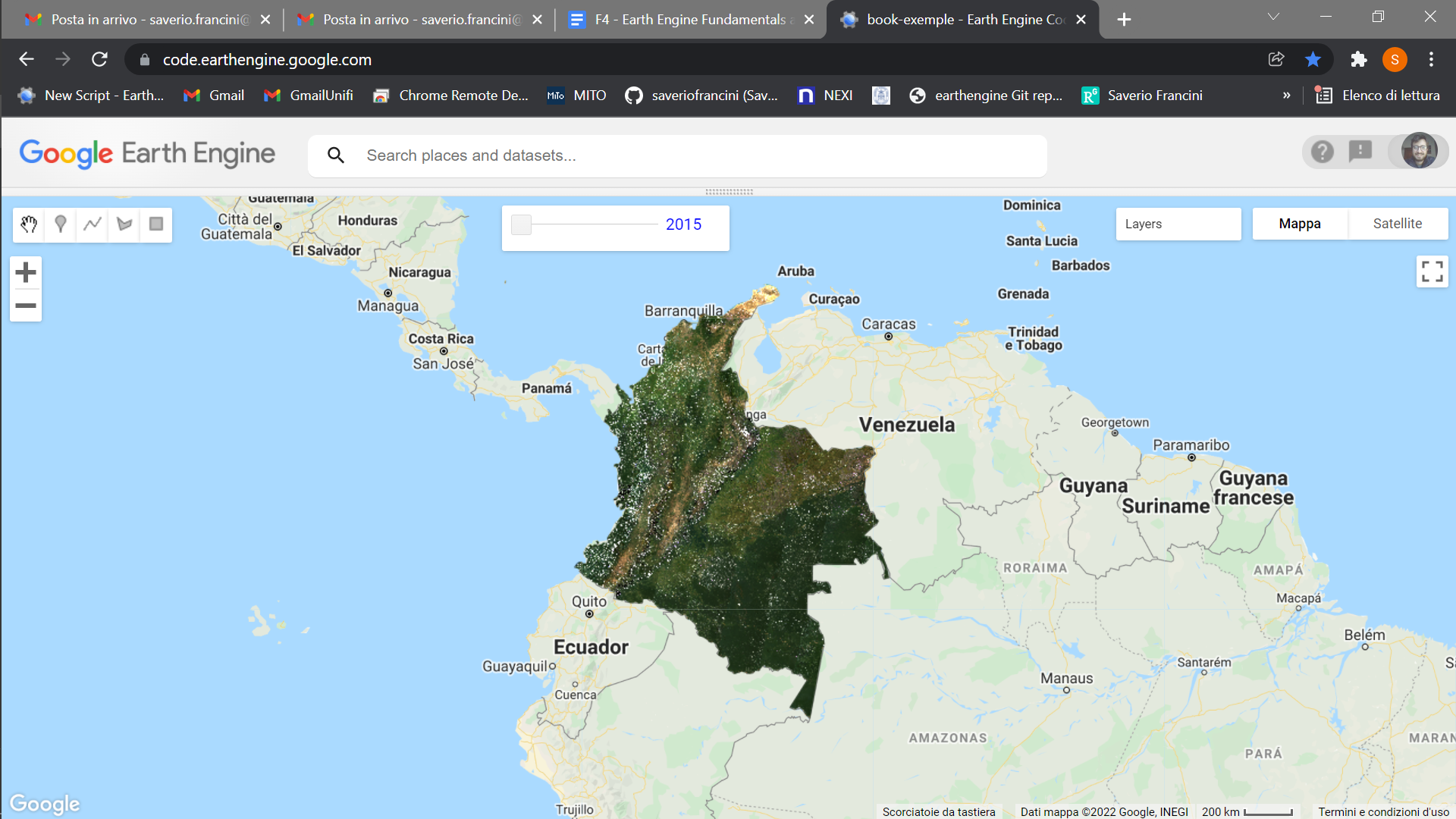

The first step is to define your AOI and center the map. The goal is to create a nationwide composite for the country of Colombia. We will use the Large Scale International Boundary (2017) simplified dataset from the US Department of State (USDOS), which contains polygons for all countries of the world.

// ---------- Section 1 -----------------

// Define the AOI.

var country = ee.FeatureCollection('USDOS/LSIB_SIMPLE/2017')

.filter(ee.Filter.equals('country_na', 'Colombia'));

// Center the Map. The second parameter is zoom level.

Map.centerObject(country, 5);

We will start by creating a composite from the Landsat 8 collection. First, we define two time variables: startDate and endDate. Here, we will create a composite for the year 2019. Then, we load the Landsat 8 Level 2, Collection 2, Tier 1 collection and filter it to our AOI and time period. We also define a function that applies scaling factors to the Landsat 8 Collection 2 data and map it over the collection.
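The scaling step is a simple linear rescaling applied to every pixel. The plain-JavaScript sketch below applies the same multipliers and offsets used in the script’s applyScaleFactors function to single hypothetical digital numbers (DN), so you can see what a scaled value should look like in the Inspector.

```javascript
// Landsat Collection 2 Level-2 optical bands: DN to surface reflectance
// (unitless), using the multiplier and offset from applyScaleFactors.
function scaleOptical(dn) {
  return dn * 0.0000275 - 0.2;
}

// Surface temperature band: DN to kelvin, same coefficients as the script.
function scaleThermal(dn) {
  return dn * 0.00341802 + 149.0;
}

// Hypothetical DN values, for illustration only.
console.log(scaleOptical(10000).toFixed(4)); // 0.0750
console.log(scaleThermal(44000).toFixed(2)); // 299.39
```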

// Define time variables.

var startDate = '2019-01-01';

var endDate = '2019-12-31';

// Load and filter the Landsat 8 collection.

var landsat8 = ee.ImageCollection('LANDSAT/LC08/C02/T1_L2')

.filterBounds(country)

.filterDate(startDate, endDate);

// Apply scaling factors.

function applyScaleFactors(image) {

var opticalBands = image.select('SR_B.').multiply(0.0000275).add(-0.2);

var thermalBands = image.select('ST_B.*').multiply(0.00341802)

.add(149.0);

return image.addBands(opticalBands, null, true)

.addBands(thermalBands, null, true);

}

landsat8 = landsat8.map(applyScaleFactors);

Now, we can create a composite. We will use the median function, which computes the same per-pixel median as reduce(ee.Reducer.median()) (Chap. F4.0) but keeps the original band names, whereas the reduce call appends a _median suffix to each band name. Reduce the ImageCollection to a median composite and add the result to the map using visualization parameters.

// Create composite.

var composite = landsat8.median().clip(country);

var visParams = {

bands: ['SR_B4', 'SR_B3', 'SR_B2'],

min: 0,

max: 0.2

};

Map.addLayer(composite, visParams, 'L8 Composite');

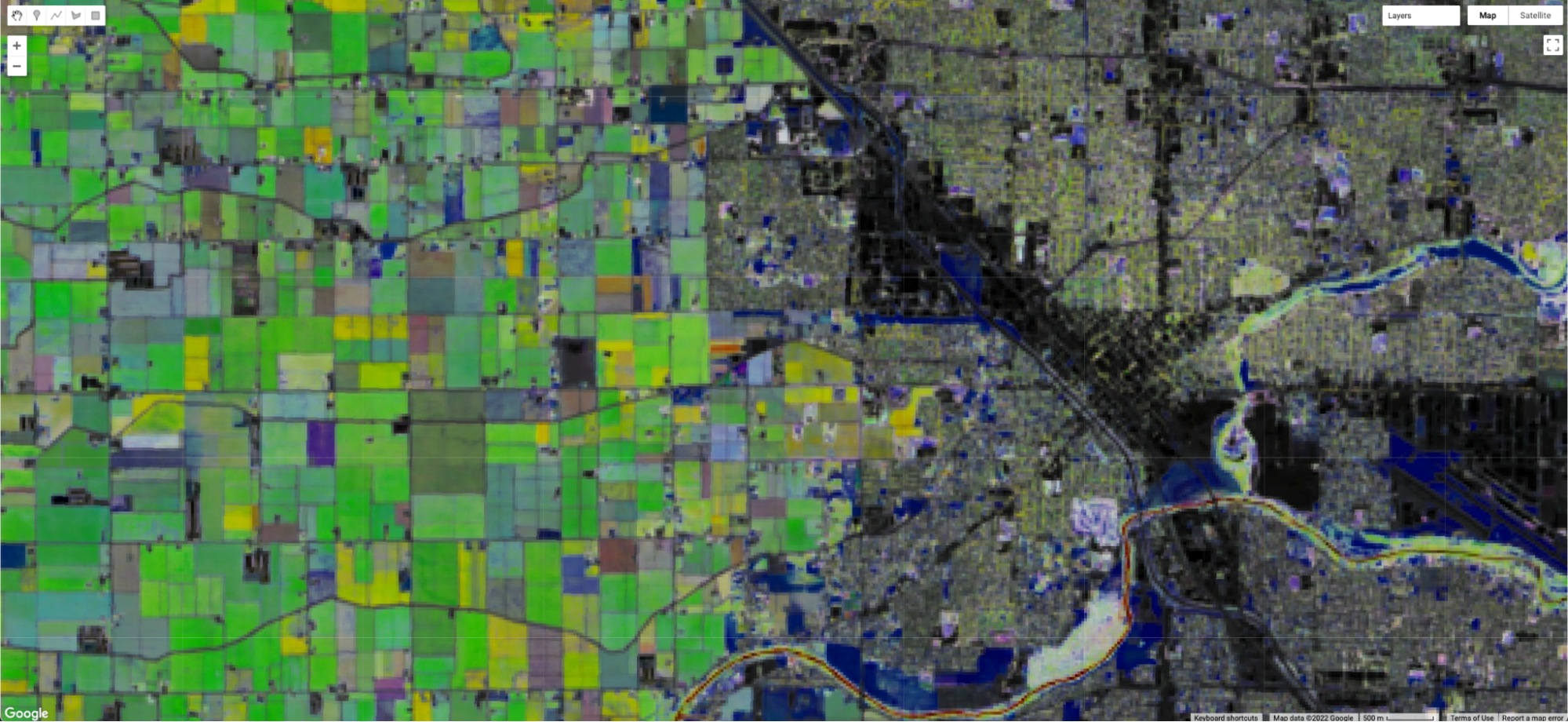

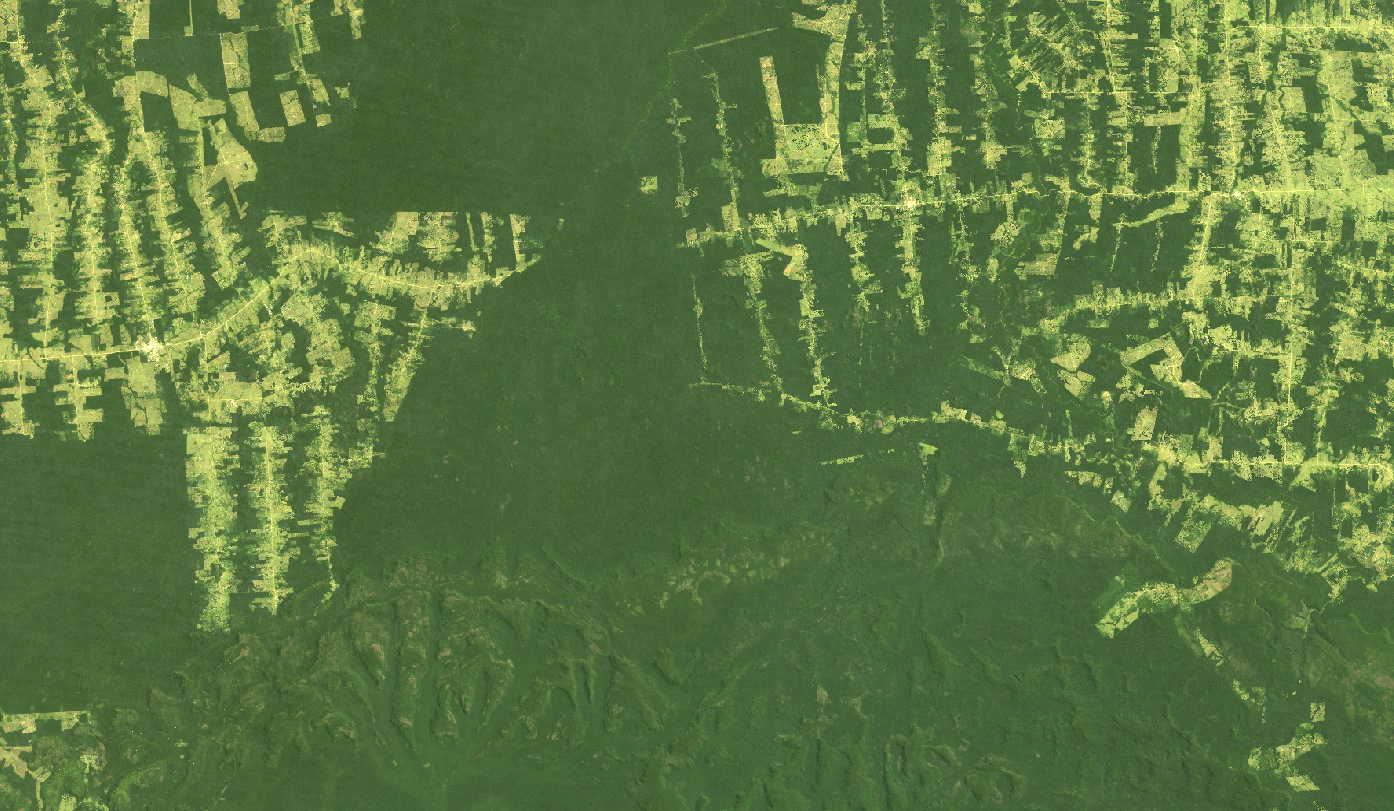

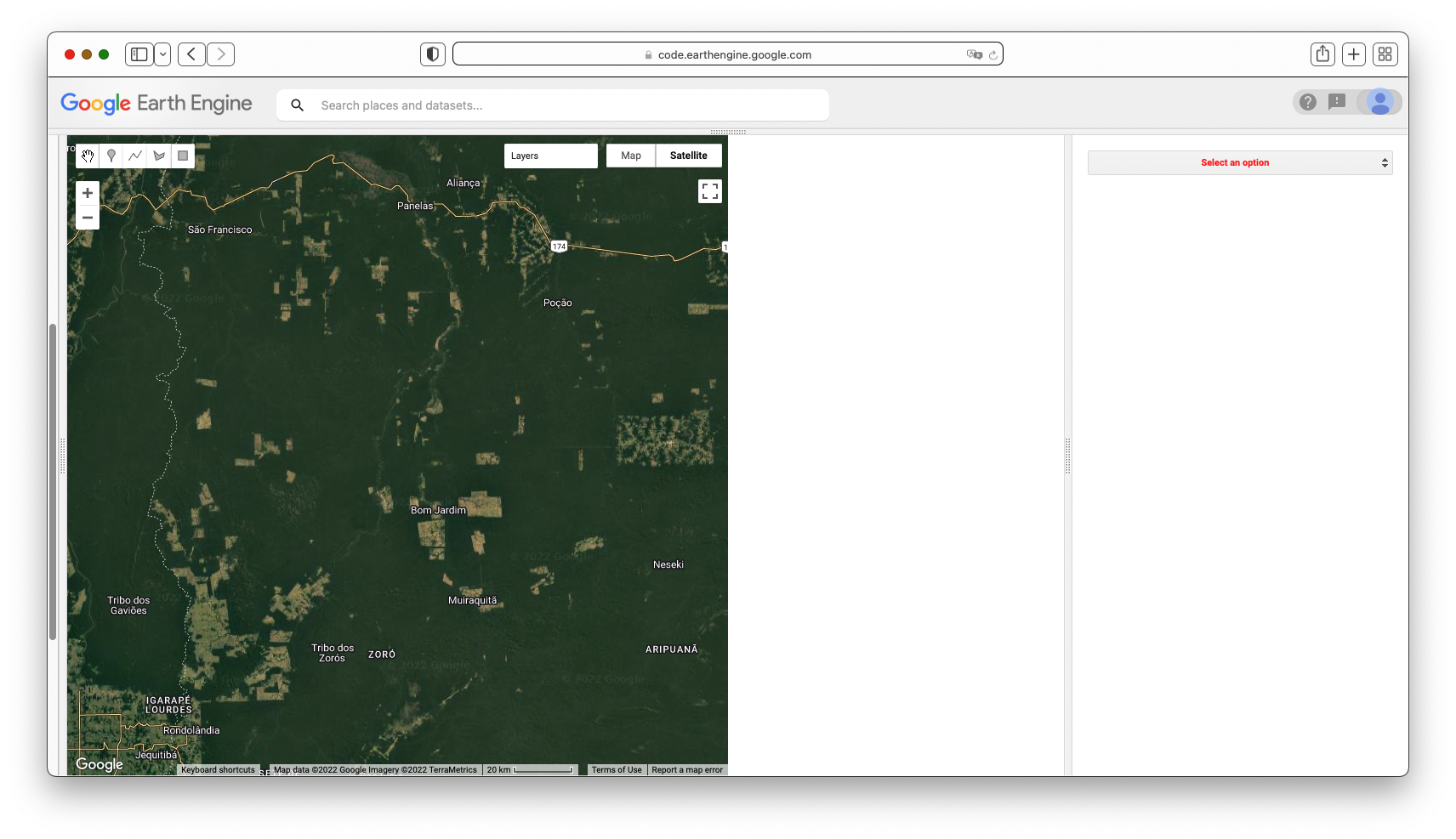

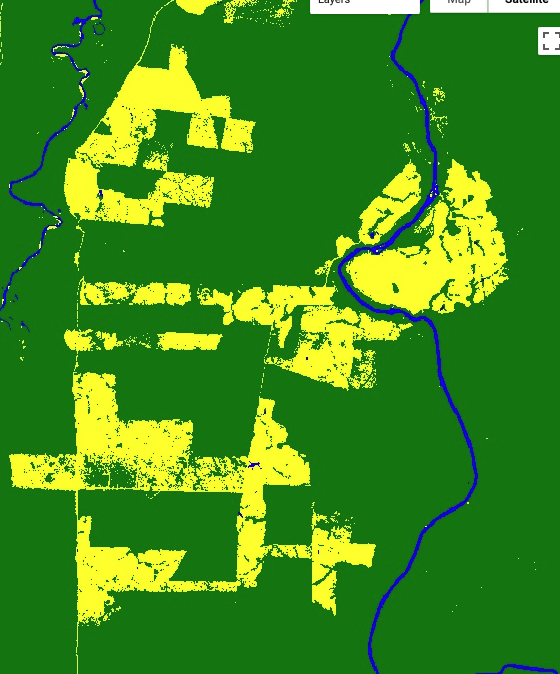

The resulting composite (Fig. F4.3.1) has lots of clouds, especially in the western, mountainous regions of Colombia. In tropical regions, it is very challenging to generate a high-quality, cloud-free composite without first filtering images for cloud cover, even if our collection is constrained to only include images acquired during the dry season. Therefore, let’s filter our collection by the CLOUD_COVER parameter to avoid cloudy images. We will start with images that have less than 50% cloud cover.

// Filter by the CLOUD_COVER property.

var landsat8FiltClouds = landsat8

.filterBounds(country)

.filterDate(startDate, endDate)

.filter(ee.Filter.lessThan('CLOUD_COVER', 50));

// Create a composite from the filtered imagery.

var compositeFiltClouds = landsat8FiltClouds.median().clip(country);

Map.addLayer(compositeFiltClouds, visParams, 'L8 Composite cloud filter');

// Print size of collections, for comparison.

print('Size landsat8 collection', landsat8.size());

print('Size landsat8FiltClouds collection', landsat8FiltClouds.size());

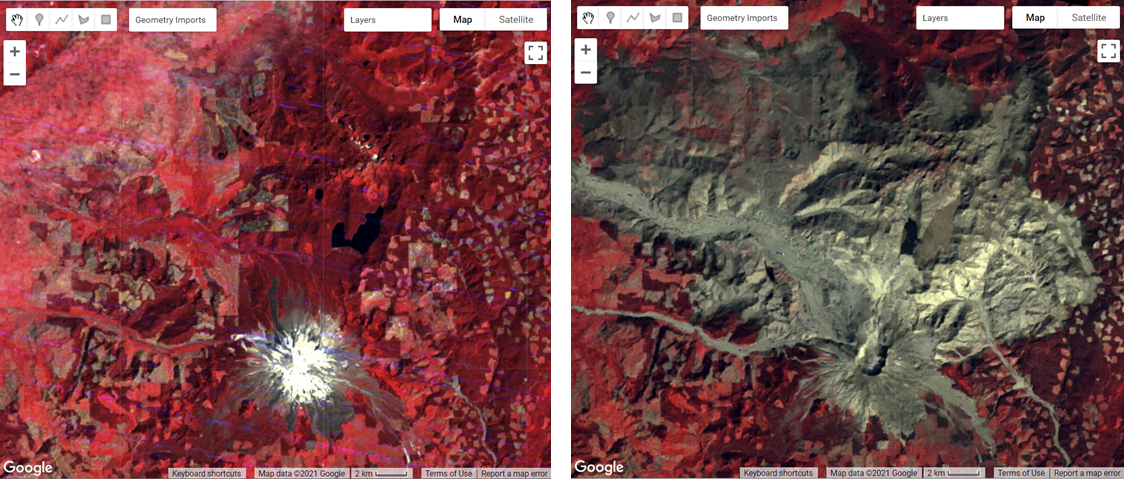

This new composite (Fig. F4.3.2) looks slightly better than the previous one, but is still very cloudy. Remember to turn off the first layer or adjust its transparency to view only this new composite. The code prints the size of both collections, using the size function, to show how many images were left out after we applied the cloud cover threshold. (There are 1201 images in the landsat8 collection, compared with 493 in the landsat8FiltClouds collection; many scenes have cloud cover greater than or equal to 50%.)

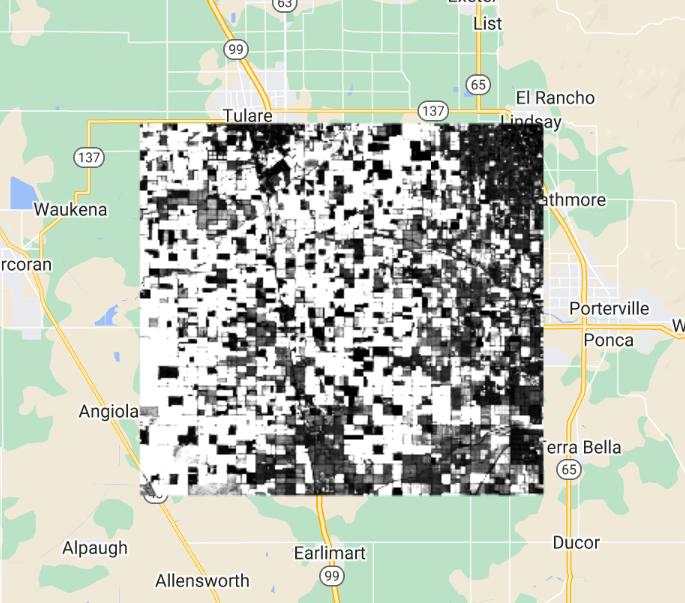

Try adjusting the CLOUD_COVER threshold in the landsat8FiltClouds variable to different percentages and checking the results. For example, with the threshold set to 20% (Fig. F4.3.3), you can see that many parts of the country have image gaps. (Remember to turn off the first layer or adjust its transparency; you can also set the shown parameter in the Map.addLayer function to false so the layer does not load automatically.) So there is a trade-off between a stricter cloud cover threshold and data availability. Additionally, even with a cloud filter, some tiles still have large areas covered by clouds.

This is due to persistent cloud cover in some regions of Colombia. However, a cloud mask can be applied to improve the results. The Landsat 8 Collection 2 data include a quality assessment (QA) band called QA_PIXEL that provides useful information on certain conditions within the data and allows users to apply per-pixel filters. Each pixel in the QA band contains an unsigned integer that represents a bit-packed combination of surface, atmospheric, and sensor conditions.

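We will also use the QA_RADSAT band, which flags radiometrically saturated pixels. QA_RADSAT is also bit-packed, with bits indicating saturation in individual bands, so any nonzero value signals a problem; we therefore keep only pixels where QA_RADSAT equals 0 and mask the rest.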

As described in Chap. F4.0, we will create a function that applies a cloud mask to an image and then map this function over our collection. The mask is applied with the updateMask function, which takes the mask as input and “eliminates” undesired pixels from the analysis by making them transparent. You will see this cloud mask function, or similar versions, in other chapters of the book. Note: Remember to set the cloud cover threshold in the landsat8FiltClouds variable back to 50.

// Define the cloud mask function.

function maskSrClouds(image) {

// Bit 0 - Fill

// Bit 1 - Dilated Cloud

// Bit 2 - Cirrus

// Bit 3 - Cloud

// Bit 4 - Cloud Shadow

var qaMask = image.select('QA_PIXEL').bitwiseAnd(parseInt('11111', 2)).eq(0);

var saturationMask = image.select('QA_RADSAT').eq(0);

return image.updateMask(qaMask)

.updateMask(saturationMask);

}

// Apply the cloud mask to the collection.

var landsat8FiltMasked = landsat8FiltClouds.map(maskSrClouds);

// Create a composite.

var landsat8compositeMasked = landsat8FiltMasked.median().clip(country);

Map.addLayer(landsat8compositeMasked, visParams, 'L8 composite masked');

Because we are dealing with bits, the maskSrClouds function uses the bitwiseAnd and parseInt functions to unpack the bit information. A bitwise AND is a binary operation that takes two equal-length binary representations and performs the logical AND operation on each pair of corresponding bits: if both bits in a given position are 1, the resulting bit is 1 (1 × 1 = 1); otherwise, it is 0 (1 × 0 = 0 and 0 × 0 = 0). The parseInt function interprets a string argument (here, the five-character string '11111') as a number in the specified base (here, base 2) and returns the corresponding integer, so parseInt('11111', 2) returns 31. ANDing QA_PIXEL with 31 therefore yields 0 only when none of bits 0 through 4 (fill, dilated cloud, cirrus, cloud, cloud shadow) is set, and eq(0) turns that result into a mask that keeps only clear pixels.
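The per-pixel test inside maskSrClouds can be reproduced with JavaScript's own bitwise operators, which makes it easy to check by hand. This is a sketch outside Earth Engine: the QA values are hypothetical numbers assembled from individual bit flags (bit 6 is the “clear” flag of QA_PIXEL), and the saturation value in the last sample is likewise made up. Chaining two updateMask calls behaves like a logical AND of the two masks, which is what keepPixel returns.

```javascript
// Plain-JavaScript mirror of the per-pixel logic in maskSrClouds.
// QA_PIXEL bits 0-4: fill, dilated cloud, cirrus, cloud, cloud shadow.
const QA_MASK_BITS = parseInt('11111', 2); // binary 11111 = decimal 31

// A pixel survives both updateMask calls only if BOTH tests pass.
function keepPixel(qaPixel, qaRadsat) {
  const qaClear = (qaPixel & QA_MASK_BITS) === 0; // none of bits 0-4 set
  const notSaturated = qaRadsat === 0;            // no saturation flag set
  return qaClear && notSaturated;
}

// Hypothetical [name, QA_PIXEL, QA_RADSAT] samples built from bit flags.
const samples = [
  ['clear', 1 << 6, 0],                     // bit 6 only -> 64
  ['cloud', (1 << 3) | (1 << 1), 0],        // cloud + dilated cloud -> 10
  ['shadow', 1 << 4, 0],                    // cloud shadow -> 16
  ['fill', 1 << 0, 0],                      // fill -> 1
  ['clear but saturated', 1 << 6, 1 << 2],  // hypothetical saturation bit
];

for (const [name, qa, radsat] of samples) {
  console.log(name, qa, keepPixel(qa, radsat));
}
// clear 64 true
// cloud 10 false
// shadow 16 false
// fill 1 false
// clear but saturated 64 false
```

Bits above 4 (such as the clear flag in bit 6) fall outside the 11111 pattern, so they never cause a pixel to be masked.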

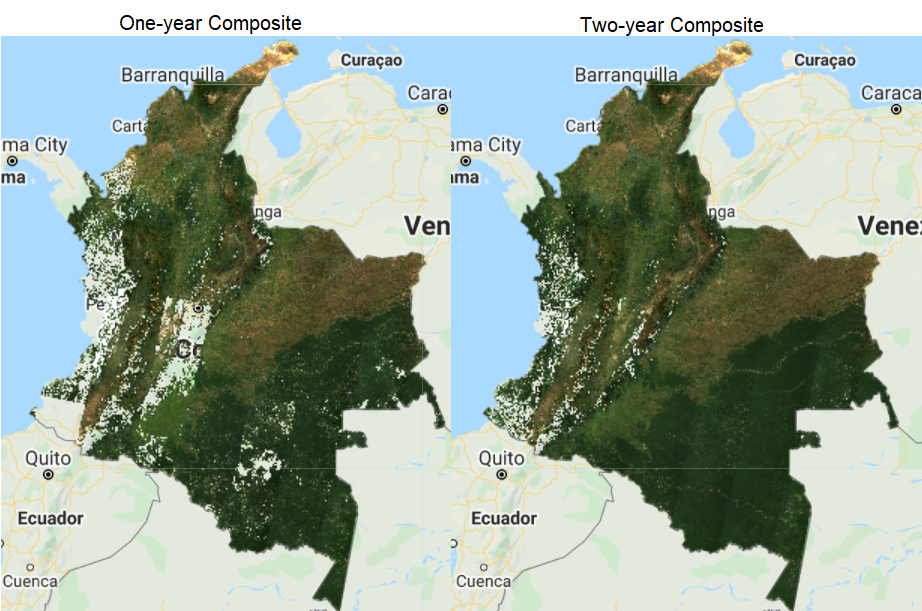

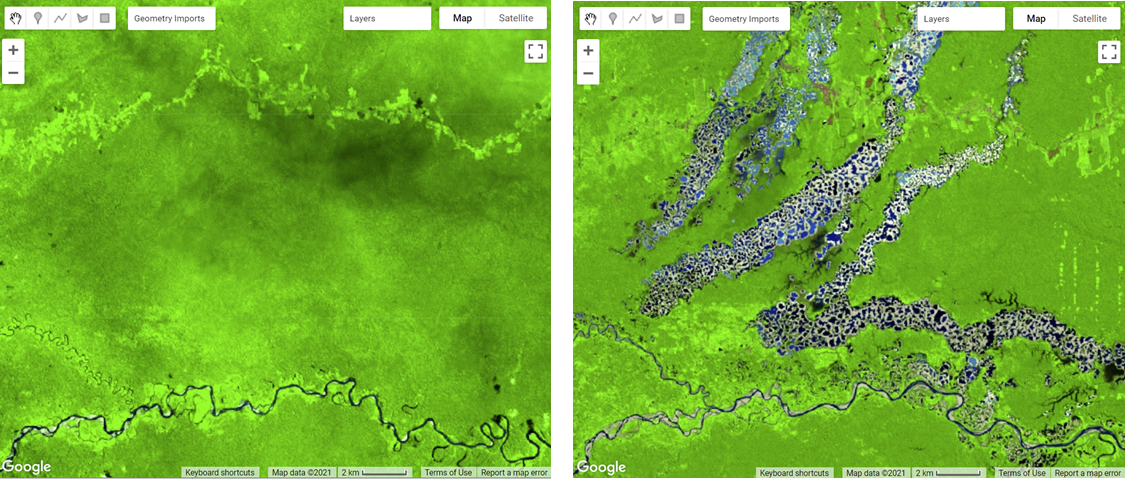

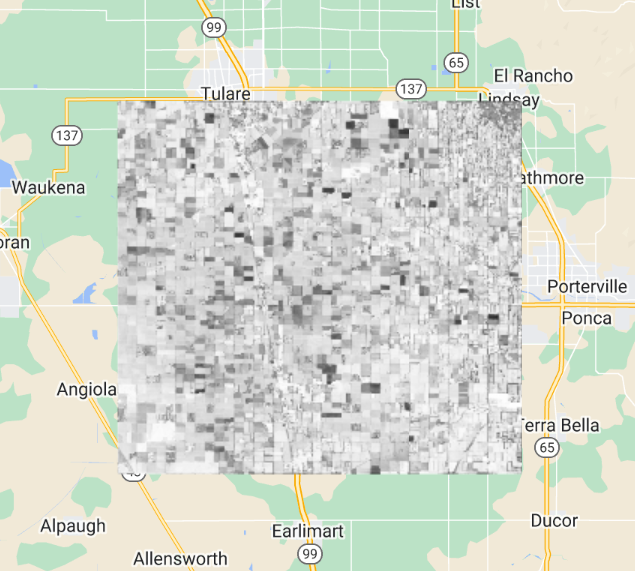

The resulting composite (Fig. F4.3.4) shows masked clouds and has more complete spatial coverage than the previous composites (don’t forget to uncheck the previous layers). This is because cloudy pixels are no longer taken into account when compositing all the images into one; each resulting pixel is therefore not cloud covered but an actual representation of the landscape. However, data gaps are still an issue due to cloud cover. If you do not specifically need an annual composite, one approach is to create a two-year composite to mitigate the missing data; another is to define a series of rules for selecting pixels for that particular year (as in Sect. 3 below). Change the startDate variable to 2018-01-01 to include all images from 2018 and 2019 in the collection. How does the cloud-masked composite (Fig. F4.3.5) compare to the 2019 one?

The resulting image has substantially fewer data gaps (you can zoom in to better see them). Again, if the time period is not a constraint for the creation of your composite, you can incorporate more images from a third year, and so on.
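Why adding images reduces gaps can be seen one pixel at a time. The sketch below is plain JavaScript, not Earth Engine code: it takes the observations of a single pixel, drops the ones removed by the mask (represented here as null), and takes the median of the rest; if every observation is masked, the pixel stays empty. It uses the textbook median (averaging the two middle values for an even count), which is an assumption about detail rather than a description of how ImageCollection.median() is implemented. The reflectance values are hypothetical.

```javascript
// Median of one pixel's observations, skipping masked ones (null).
// Returns null when every observation is masked, i.e., a data gap.
function maskedMedian(values) {
  const valid = values.filter((v) => v !== null).sort((a, b) => a - b);
  if (valid.length === 0) return null;
  const mid = Math.floor(valid.length / 2);
  return valid.length % 2 === 1
    ? valid[mid]
    : (valid[mid - 1] + valid[mid]) / 2;
}

// Hypothetical red-band reflectances; null = pixel masked as cloud.
const pixelA2019 = [0.08, null, 0.07, null, 0.09];
const pixelB2019 = [null, null, null];
const pixelB2018 = [null, 0.11, null];

console.log(maskedMedian(pixelA2019));                    // 0.08
console.log(maskedMedian(pixelB2019));                    // null (gap in 2019)
console.log(maskedMedian(pixelB2019.concat(pixelB2018))); // 0.11 (gap filled)
```

Pixel B illustrates the two-year composite: a location that was cloudy on every 2019 date gets a value as soon as a single clear 2018 observation is added to the stack.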

Code Checkpoint F43a. The book’s repository contains a script that shows what your code should look like at this point.

Incorporating Data from Other Satellites

Another option to reduce data gaps in cloudy situations is to bring in imagery from other sensors acquired during the time period of interest. The Landsat collection spans multiple missions that have acquired data continuously since 1972, and because the satellites image a given location on different dates, combining them increases the number of observations available. Next, we will incorporate Landsat 7 Level 2, Collection 2, Tier 1 images from 2019 to fill the gaps in the 2019 Landsat 8 composite.

To generate a Landsat 7 composite, we apply steps similar to those we used for Landsat 8, so keep adding code to the same script from Sect. 1. First, define your Landsat 7 collection variable and the scaling function. Then, filter the collection, apply the cloud mask (since we know Colombia has persistent cloud cover), and apply the scaling function. We can reuse the cloud mask function defined above, since the QA_PIXEL bits it checks are defined the same way for Landsat 7 as for Landsat 8 (bit 2, cirrus, is simply unused for Landsat 7). Finally, create the median composite. After pasting in the code below but before executing it, change the startDate variable back to 2019-01-01 to create a one-year composite of 2019.

// ---------- Section 2 -----------------

// Define Landsat 7 Level 2, Collection 2, Tier 1 collection.

var landsat7 = ee.ImageCollection('LANDSAT/LE07/C02/T1_L2');

// Scaling factors for L7.

function applyScaleFactorsL7(image) {

var opticalBands = image.select('SR_B.').multiply(0.0000275).add(-0.2);

var thermalBand = image.select('ST_B6').multiply(0.00341802).add(149.0);

return image.addBands(opticalBands, null, true)

.addBands(thermalBand, null, true);

}

// Filter collection, apply cloud mask, and scaling factors.

var landsat7FiltMasked = landsat7

.filterBounds(country)

.filterDate(startDate, endDate)

.filter(ee.Filter.lessThan('CLOUD_COVER', 50))

.map(maskSrClouds)

.map(applyScaleFactorsL7);

// Create composite.

var landsat7compositeMasked = landsat7FiltMasked

.median()

.clip(country);

Map.addLayer(landsat7compositeMasked,

{

bands: ['SR_B3', 'SR_B2', 'SR_B1'],

min: 0,

max: 0.2 }, 'L7 composite masked');
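To see what applyScaleFactorsL7 does to a single pixel, its arithmetic can be sketched in plain JavaScript outside Earth Engine. The scale and offset constants are the ones used in the function above; the raw digital numbers (DN) are hypothetical, chosen only to show the conversion.

```javascript
// Scale factors and offsets copied from applyScaleFactorsL7.
const OPTICAL_SCALE = 0.0000275;
const OPTICAL_OFFSET = -0.2;
const THERMAL_SCALE = 0.00341802;
const THERMAL_OFFSET = 149.0;

// Optical SR_B* bands: DN -> surface reflectance (unitless).
function toReflectance(dn) {
  return dn * OPTICAL_SCALE + OPTICAL_OFFSET;
}

// Thermal ST_B6 band: DN -> surface temperature in kelvin.
function toKelvin(dn) {
  return dn * THERMAL_SCALE + THERMAL_OFFSET;
}

// Hypothetical DNs, not taken from a real scene.
console.log(toReflectance(10000).toFixed(4)); // 0.0750
console.log(toReflectance(20000).toFixed(4)); // 0.3500
console.log(toKelvin(44000).toFixed(2));      // 299.39
```

Because the optical offset is negative, a DN of 0 would become a reflectance of −0.2, which is one more reason to mask unusable pixels before compositing. After scaling, the visualization range min: 0, max: 0.2 is expressed directly in reflectance units.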

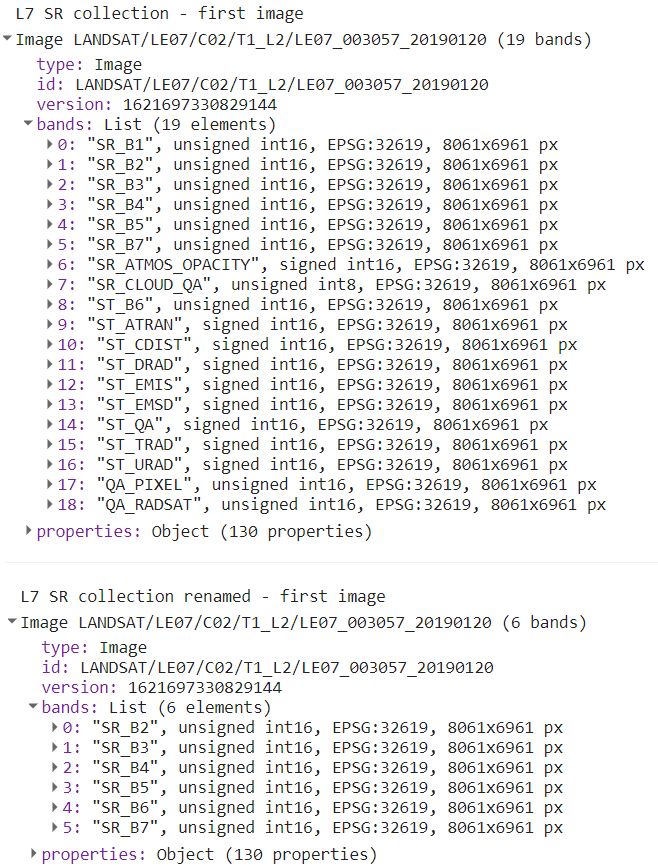

Note that we used bands: ['SR_B3', 'SR_B2', 'SR_B1'] to visualize the composite because Landsat 7 has different band designations. The sensors aboard the Landsat satellites were designed to acquire data in different wavelength ranges of the electromagnetic spectrum. Whereas the red, green, and blue bands are B4, B3, and B2, respectively, for Landsat 8, they are B3, B2, and B1, respectively, for Landsat 7.

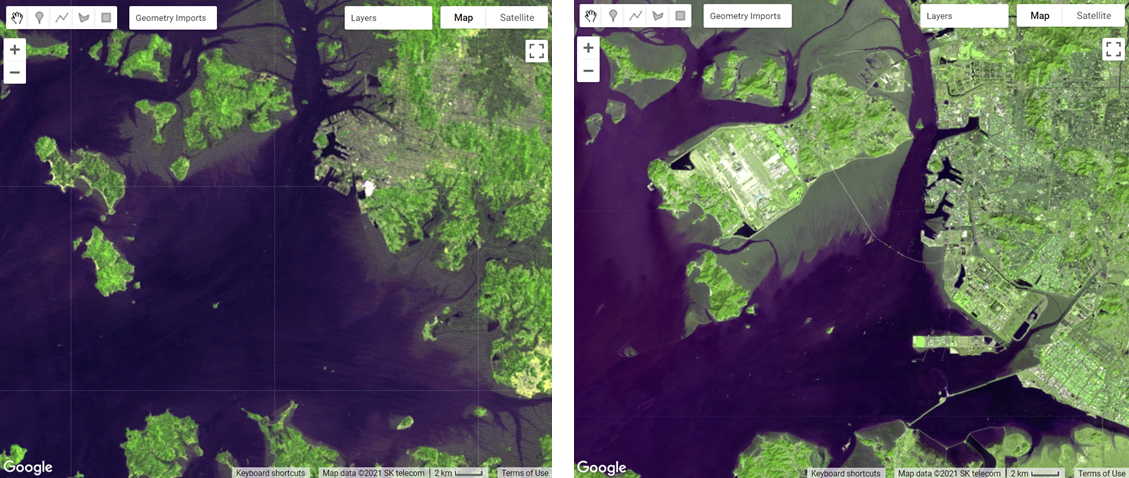

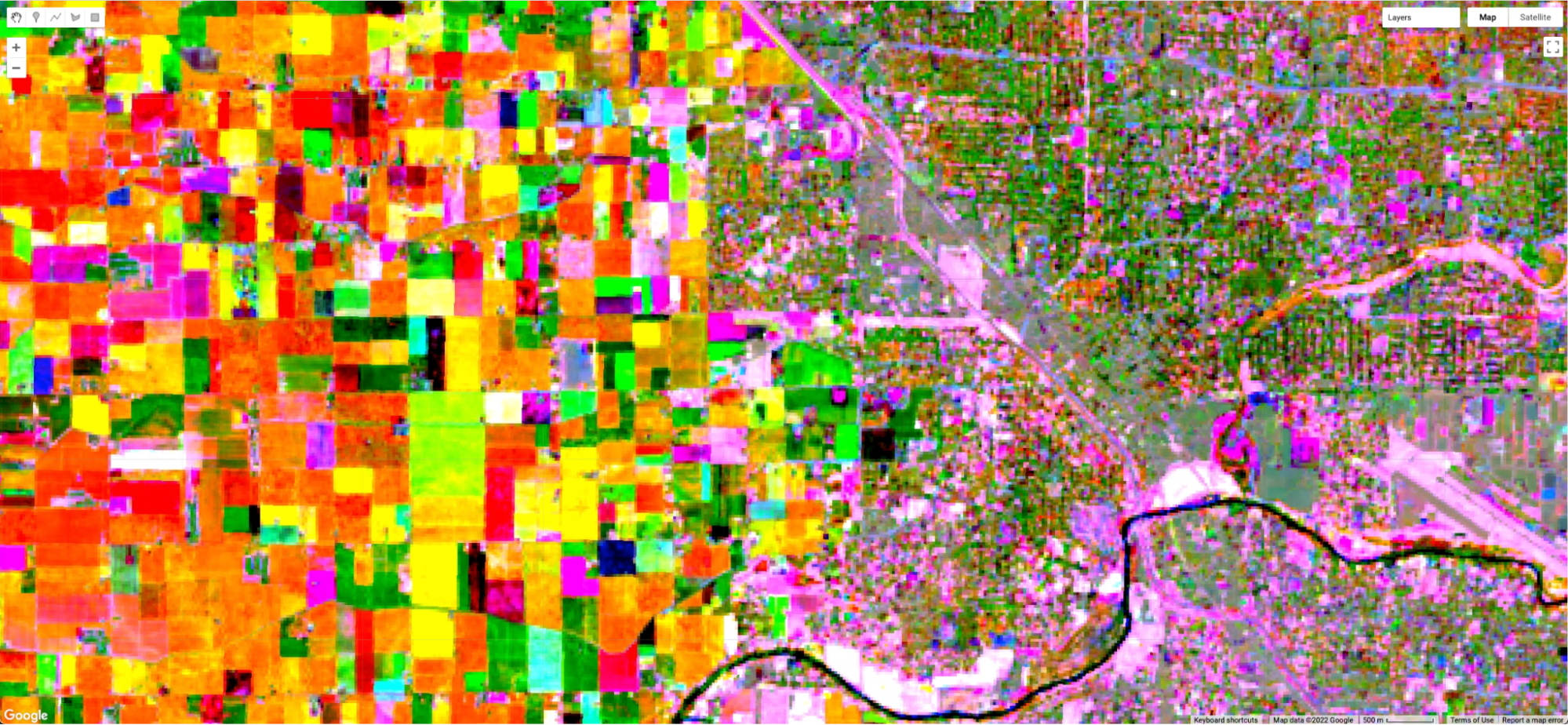

You should see an image with systematic gaps like the one shown in Fig. F4.3.6 (remember to turn off the other layers, and zoom in to better see the data gaps). Landsat 7 was launched in 1999, but since 2003 its data have contained gaps caused by a failure of the scan line corrector (SLC). Without an operating SLC, the sensor’s line of sight traces a zig-zag pattern along the satellite ground track; as a result, some areas are imaged twice and others are missed. When the Level 1 data are processed, the duplicated areas are removed, leaving data gaps (Fig. F4.3.7). For more information about Landsat 7 and the SLC error, see the USGS Landsat 7 page. Even with the SLC error, we can still use the Landsat 7 data in our composite. Now, let’s combine the Landsat 7 and 8 collections.

Since Landsat 7 and 8 have different band designations, first we create a function to rename the bands from Landsat 7 to match the names used for Landsat 8 and map that function over our Landsat 7 collection.

// Since Landsat 7 and 8 have different band designations,

// let's create a function to rename L7 bands to match L8.

function rename(image) {

return image.select(

['SR_B1', 'SR_B2', 'SR_B3', 'SR_B4', 'SR_B5', 'SR_B7'],

['SR_B2', 'SR_B3', 'SR_B4', 'SR_B5', 'SR_B6', 'SR_B7']);

}

// Apply the rename function.

var landsat7FiltMaskedRenamed = landsat7FiltMasked.map(rename);

If you print the first image of both the landsat7FiltMasked and landsat7FiltMaskedRenamed collections (Fig. F4.3.8), you will see that the bands were renamed and that not all bands were copied over (SR_ATMOS_OPACITY, SR_CLOUD_QA, ST_B6, etc.). To keep these additional bands, add them to both lists in the rename function. The Landsat 7 thermal band is named ST_B6, so it does not clash with the renamed SR_B6 (formerly SR_B5, shortwave infrared 1); to match Landsat 8, you could rename it to ST_B10.
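The renaming in the rename function works by position: the first name in the first list of image.select(from, to) receives the first name in the second list, and so on. A plain-JavaScript sketch of that pairing makes the correspondence easy to check. The spectral-region labels are descriptive annotations added here, not properties read from the images, and the length check belongs to this hypothetical pairBands helper.

```javascript
// Input and output name lists from the rename function.
const l7Bands = ['SR_B1', 'SR_B2', 'SR_B3', 'SR_B4', 'SR_B5', 'SR_B7'];
const l8Bands = ['SR_B2', 'SR_B3', 'SR_B4', 'SR_B5', 'SR_B6', 'SR_B7'];
// Descriptive labels only; not read from image metadata.
const regions = ['blue', 'green', 'red', 'near infrared',
  'shortwave infrared 1', 'shortwave infrared 2'];

// Hypothetical helper: pair two lists by position, as select(from, to) does.
function pairBands(from, to) {
  if (from.length !== to.length) {
    throw new Error('Band lists must have the same length');
  }
  const mapping = {};
  from.forEach((name, i) => { mapping[name] = to[i]; });
  return mapping;
}

const l7ToL8 = pairBands(l7Bands, l8Bands);
l7Bands.forEach((name, i) => {
  console.log(`${name} -> ${l7ToL8[name]} (${regions[i]})`);
});
// SR_B1 -> SR_B2 (blue) ... SR_B7 -> SR_B7 (shortwave infrared 2)
```

Printing the pairs this way is a quick check that every Landsat 7 band lands on the Landsat 8 band covering the same spectral region before the collections are merged.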

Now we merge the two collections using the merge function for ImageCollection, then map a function over the merged collection that casts each image to a 32-bit float with the toFloat function, for consistency. To merge collections, the number and names of the bands must be the same in each collection, so we use the select function (Chap. F1.1) to keep only the six Landsat 8 bands that match the renamed Landsat 7 bands. If we did not select these six bands, we would get an error when creating the Landsat 7 and 8 composite, because we would be compositing a collection with 6 bands (Landsat 7) together with a collection with 19 bands (Landsat 8).

// Merge Landsat collections.

var landsat78 = landsat7FiltMaskedRenamed

.merge(landsat8FiltMasked.select(

['SR_B2', 'SR_B3', 'SR_B4', 'SR_B5', 'SR_B6', 'SR_B7']))

.map(function(img) {

return img.toFloat();

});

print('Merged collections', landsat78);

Now we have a collection with about 1000 images. Next, we will take the median of the values across the ImageCollection.

// Create Landsat 7 and 8 image composite and add to the Map.

var landsat78composite = landsat78.median().clip(country);

Map.addLayer(landsat78composite, visParams, 'L7 and L8 composite');

Comparing the composite built from both Landsat 7 and 8 with the Landsat 8-only composite, it is evident that the final result has fewer data gaps (Fig. F4.3.9). The combined composite still has gaps caused by clouds and by Landsat 7’s SLC-off data. To see the inset example of Fig. F4.3.9, try centering the map on the point at latitude 3.6023 and longitude −75.0741.

Code Checkpoint F43b. The book’s repository contains a script that shows what your code should look like at this point.

Best-Available-Pixel Compositing Earth Engine Application

This section presents GEE-BAP, an Earth Engine application that generates annual best-available-pixel (BAP) image composites anywhere on the globe by combining multiple Landsat sensors and images. Annual BAP image composites are generated by choosing the optimal observation for each pixel from all available Landsat 5 TM, Landsat 7 ETM+, and Landsat 8 OLI imagery within a given year and within a given day range of a specified acquisition day of year, subject to other constraints defined by the user. The data accessible via Earth Engine come from the USGS free and open archive of Landsat data, and the Landsat images used are atmospherically corrected to surface reflectance values. Following White et al. (2014), a series of scoring functions ranks each pixel observation by (1) acquisition day of year, (2) cloud cover in the scene, (3) distance to clouds and cloud shadows, (4) presence of haze, and (5) acquisition sensor. Further information on the BAP image compositing approach can be found in Griffiths et al. (2013), and detailed information on tuning parameters can be found in White et al. (2014).

Code Checkpoint F43c. The book’s repository contains information about accessing the GEE-BAP interface and its related functions.

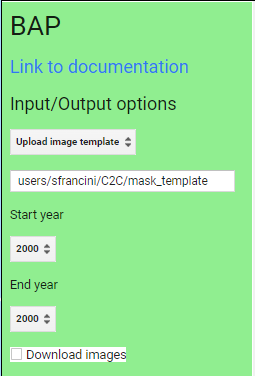

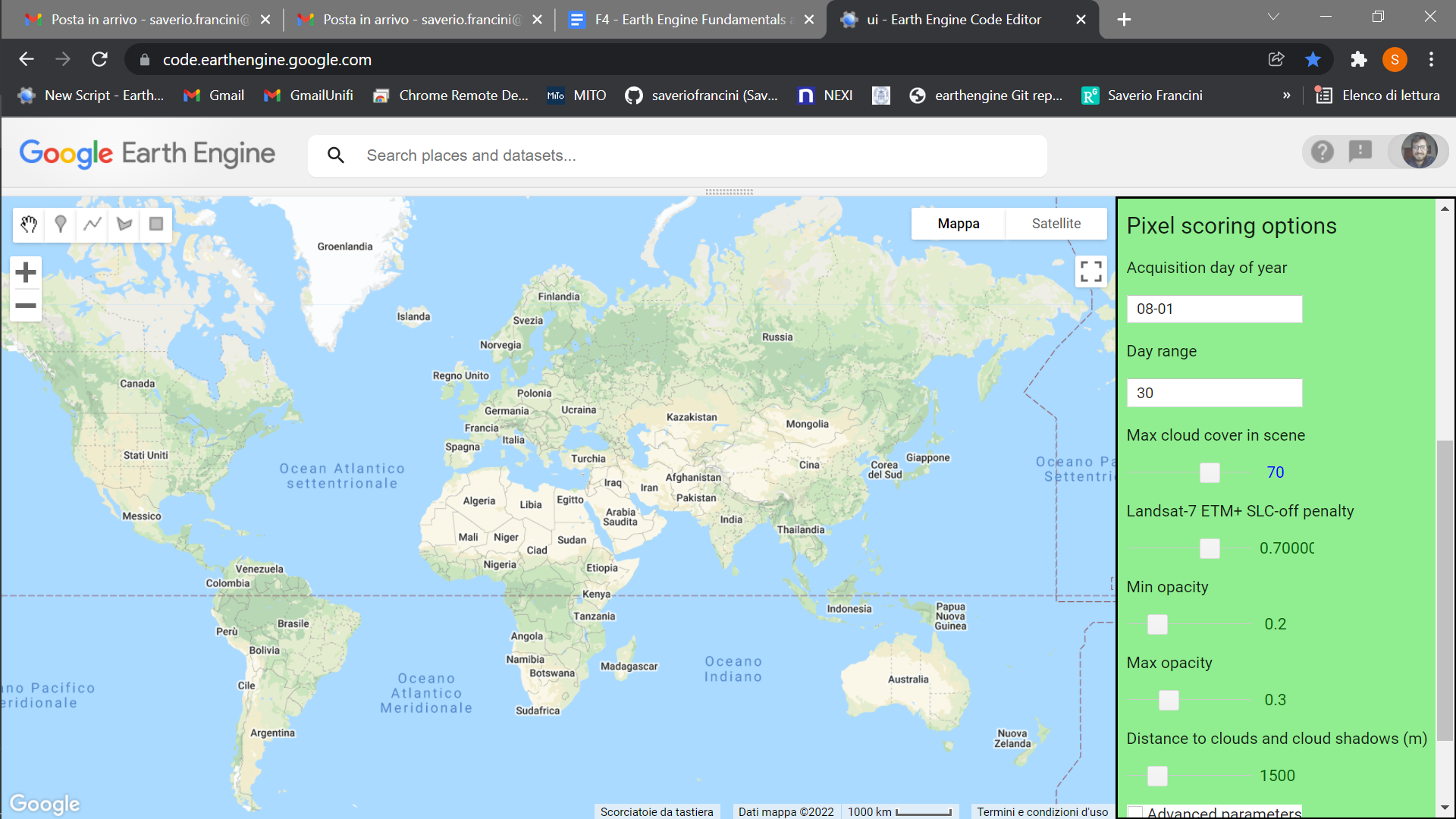

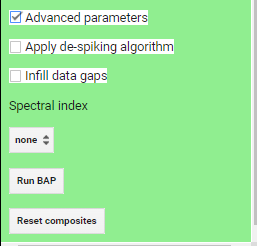

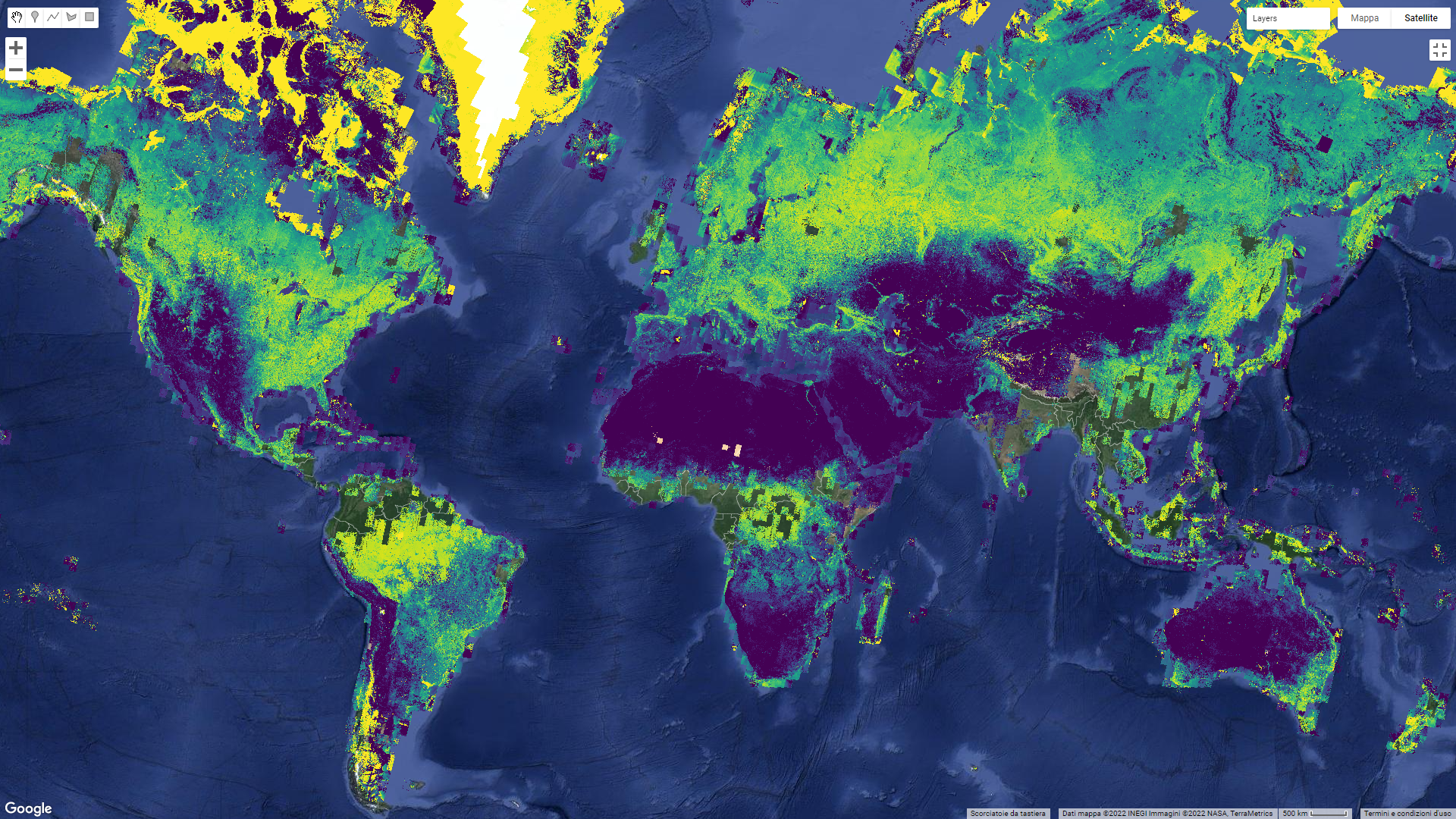

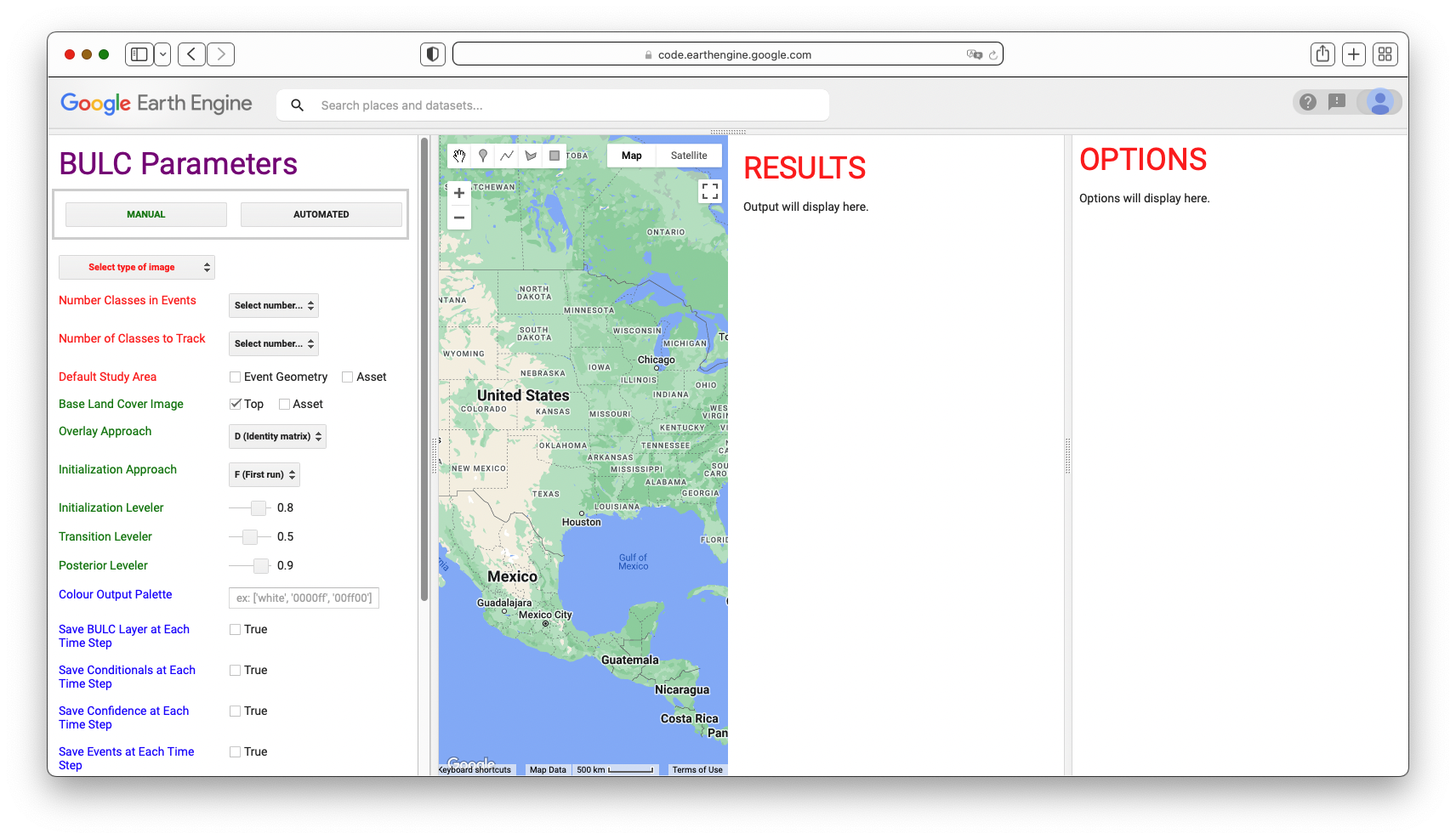

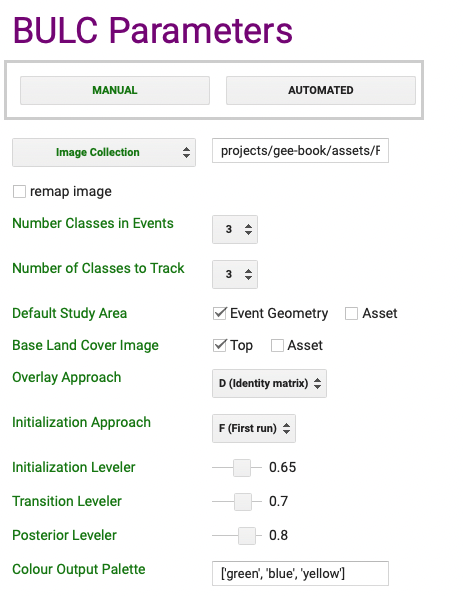

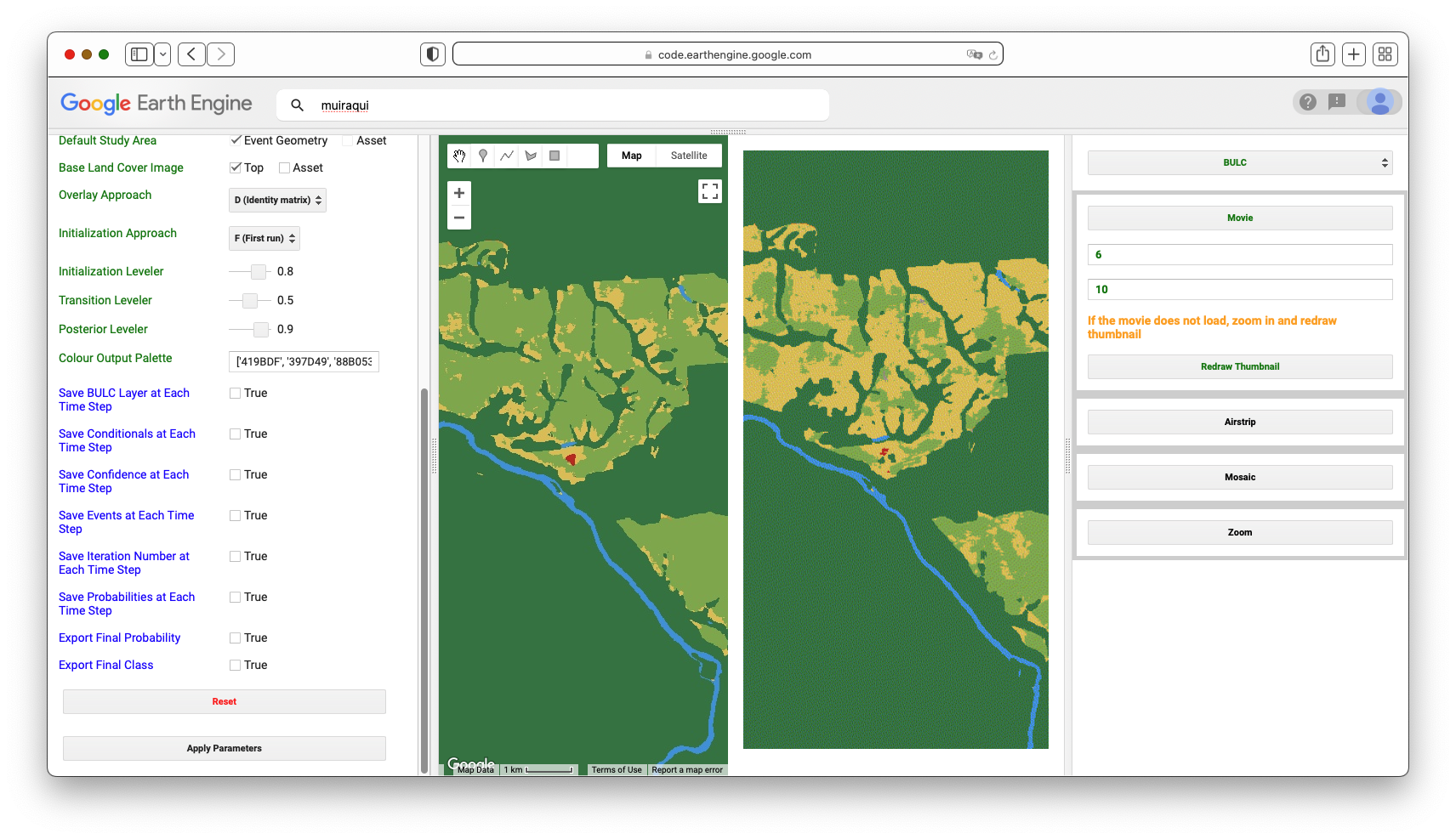

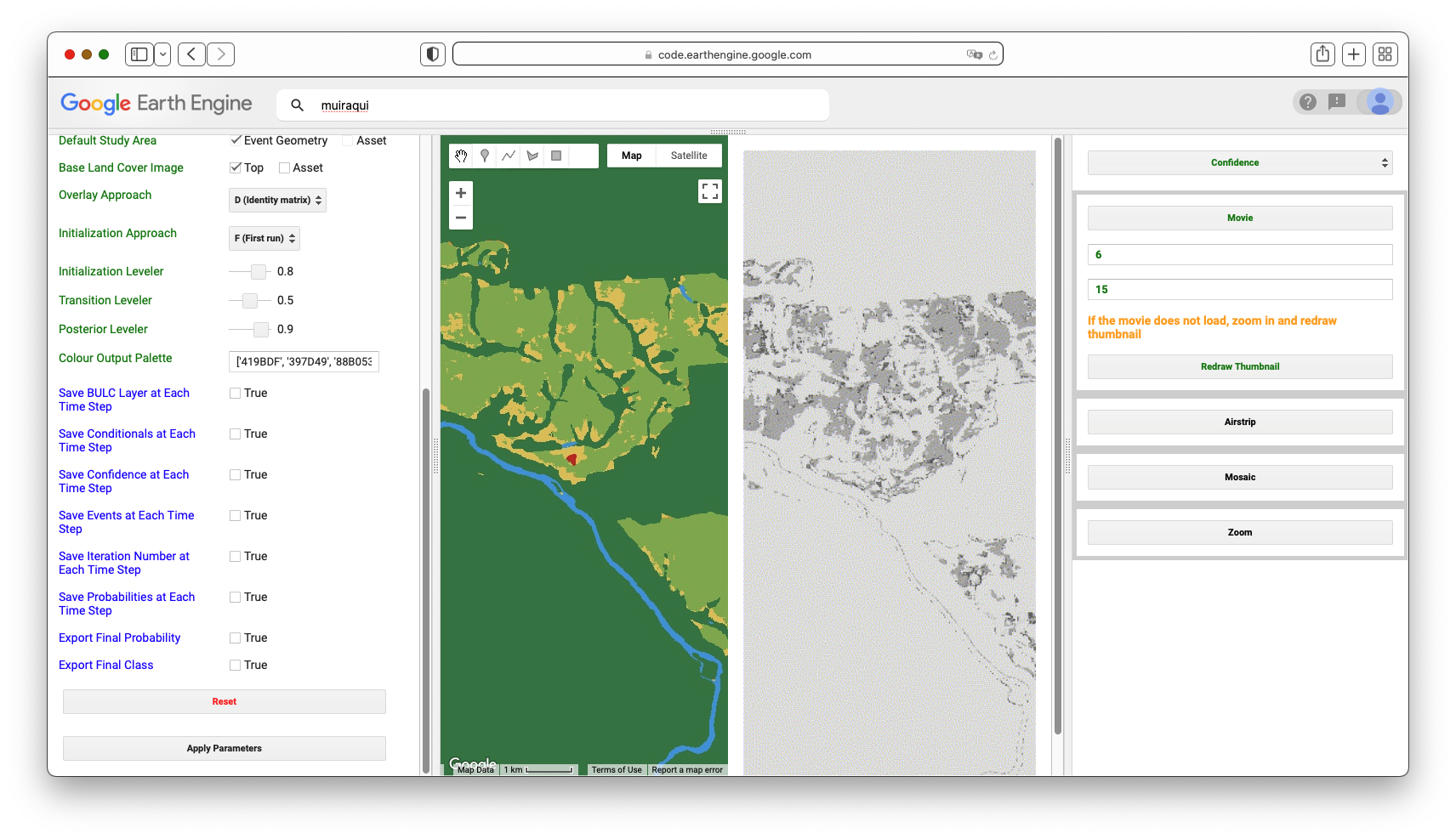

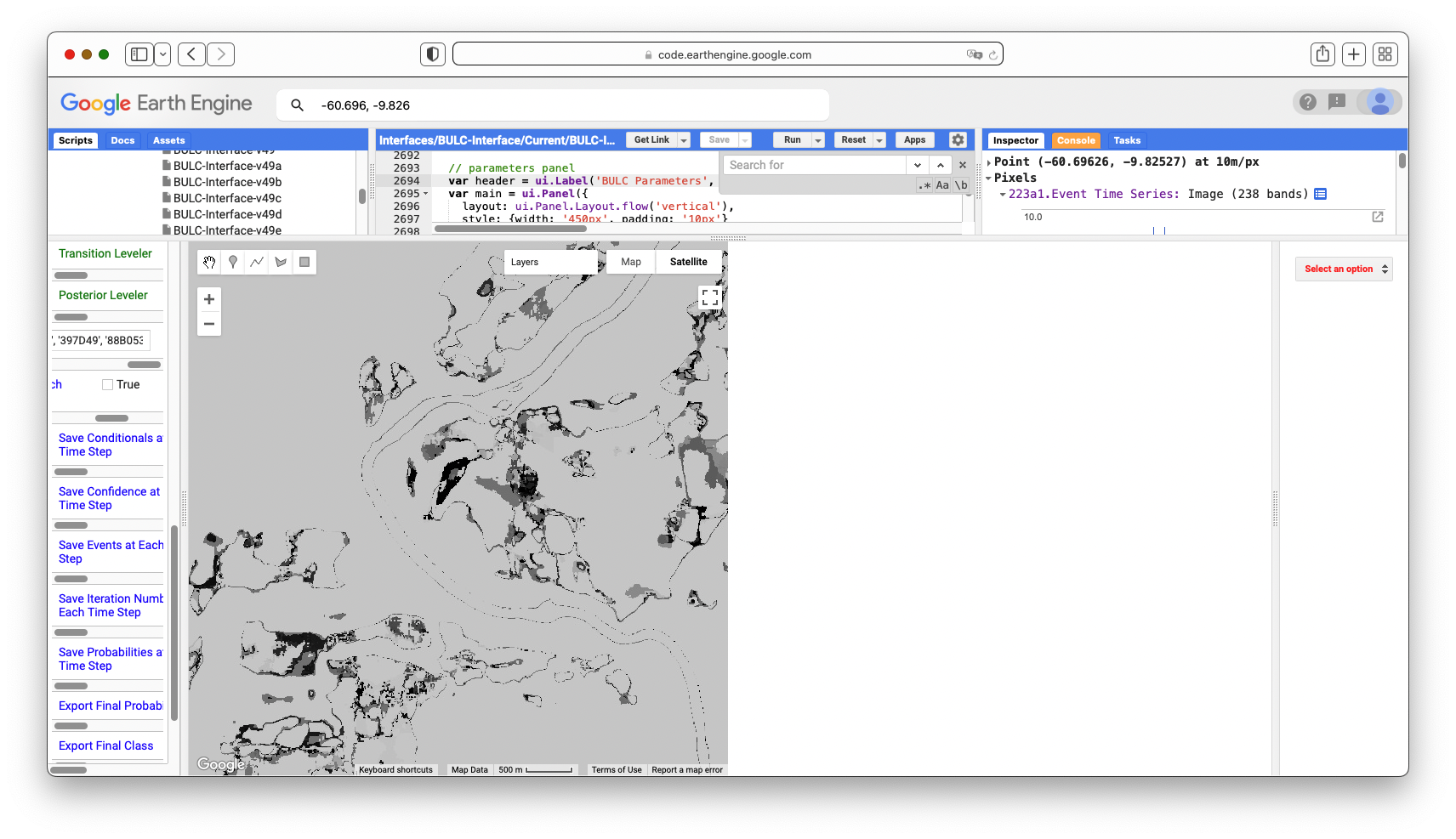

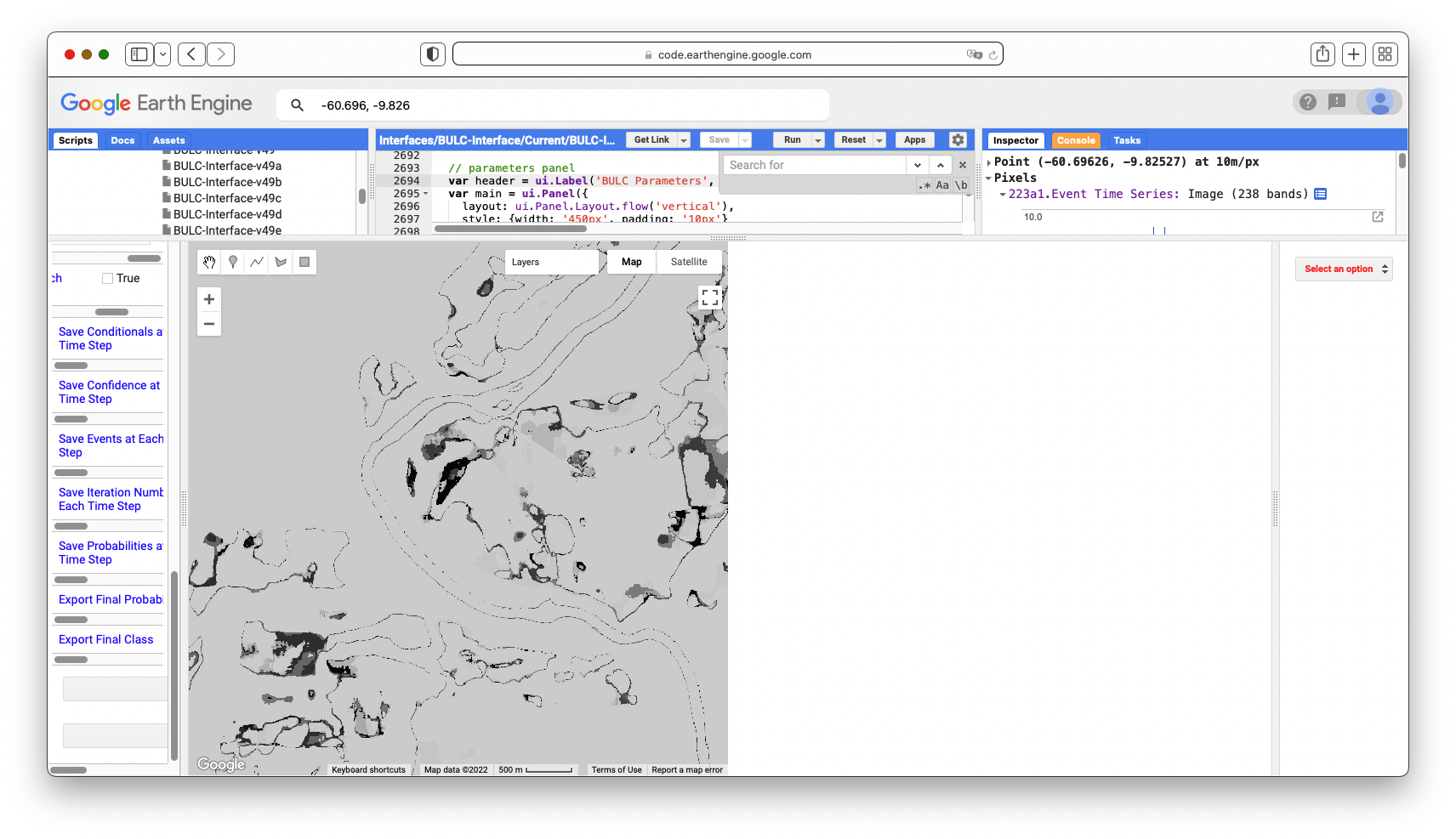

Once you have loaded the GEE-BAP interface (Fig. F4.3.10) using the instructions in the Code Checkpoint, you will notice that it is divided into three sections: (1) Input/Output options, (2) Pixel scoring options, and (3) Advanced parameters. In the Input/Output options, users indicate the study area, the time period for generating annual BAP composites (i.e., start and end years), and the path where the results are stored. Users have three options to define the study area. The Draw study area option uses the Draw a shape and Draw a rectangle tools to define the area of interest. The Upload image template option uses an image template uploaded by the user in TIFF format; it is well suited to generating BAP composites that match the projection, pixel size, and extent of existing raster datasets. The Work globally option generates BAP composites for the entire globe; note that when this option is selected, complete data download is not available because of the Earth’s size.

With Start year and End year, users indicate the beginning and end of the annual time series of BAP image composites to be generated. One composite is generated for each year, resulting in a time series of annual composites, and each year’s composite is built only from images acquired on days within the specified Date range. The BAP composites can be saved to the Google Drive folder given in Path by using the Tasks tab. Results are exported as tiled TIFF files, accompanied by a CSV file that lists the parameters used to construct the composite.

As noted, GEE-BAP implements five pixel scoring functions: (1) target acquisition day of year and day range, (2) maximum cloud coverage per scene, (3) distance to clouds and cloud shadows, (4) atmospheric opacity, and (5) a penalty for images acquired under the Landsat 7 ETM+ SLC-off malfunction. With Acquisition day of year and Day range, candidate pixels acquired closer to the defined acquisition day of year are ranked higher, and pixels acquired outside the day range window are excluded from subsequent composite development. For example, if the target day of year is defined as “08-01” and the day range as “31,” only those pixels acquired between July 1 and August 31 are considered, and those acquired closer to August 1 receive a higher score.
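The day-of-year window can be illustrated with a small scoring function. This is a hypothetical sketch, not GEE-BAP’s code: the linear decline from 1 at the target date to 0 at the edge of the day range is an assumption chosen for simplicity (the actual scoring functions are described in White et al. 2014), and dates are compared by day of year within a single year.

```javascript
// Hypothetical day-of-year scoring; NOT the GEE-BAP implementation.
const MS_PER_DAY = 86400000;

// Parse 'YYYY-MM-DD' as UTC so time zones cannot shift the day.
function parseUtc(iso) {
  return new Date(iso + 'T00:00:00Z');
}

// Day of year, with January 1 as day 1.
function dayOfYear(date) {
  const jan1 = Date.UTC(date.getUTCFullYear(), 0, 1);
  return Math.round((date.getTime() - jan1) / MS_PER_DAY) + 1;
}

// Assumed linear score: 1 at the target day, 0 at +/- dayRange days.
// Returns null outside the window (pixel excluded from compositing).
// Windows that wrap across the new year are not handled.
function doyScore(acquired, target, dayRange) {
  const diff = Math.abs(dayOfYear(acquired) - dayOfYear(target));
  if (diff > dayRange) return null;
  return 1 - diff / dayRange;
}

const target = parseUtc('2019-08-01'); // target day of year "08-01"
const range = 31;                      // day range "31"

for (const iso of ['2019-06-15', '2019-07-01', '2019-07-20', '2019-08-01', '2019-08-10']) {
  const score = doyScore(parseUtc(iso), target, range);
  console.log(iso, score === null ? 'excluded' : score.toFixed(3));
}
// 2019-06-15 excluded
// 2019-07-01 0.000
// 2019-07-20 0.613
// 2019-08-01 1.000
// 2019-08-10 0.710
```

In a full BAP workflow, a score like this would be combined with the other pixel scores before choosing the best observation; how GEE-BAP weights and combines them is documented in the references above, not in this sketch.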

The Max cloud cover in scene scoring function sets the maximum percentage of cloud cover in an image that the user will accept in the BAP image compositing process. A value of 70% means that only scenes with 70% or less cloud cover are considered as candidates for compositing.